Introduction

Today we would like to present one of the first chapters of our internal training series, in which we introduce the fundamental tools for data processing in Data Science: Pandas, NumPy and Matplotlib. Pandas is a third-party library for data analysis and manipulation, built on top of NumPy. It excels at handling labeled one-dimensional (1D) data with Series objects and two-dimensional (2D) data with DataFrame objects.

NumPy is a third-party library for numerical computing, optimized for working with single- and multi-dimensional arrays. Its primary type is the array type called ndarray. This library contains many routines for statistical analysis.
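As a minimal sketch of the ndarray type and one of its statistical routines, the following builds a small two-dimensional array and computes its mean. The sample values are hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical sample values, used only for illustration.
values = np.array([[1.0, 2.0, 3.0],
                   [4.0, 5.0, 6.0]])

print(type(values).__name__)  # ndarray
print(values.shape)           # (2, 3)
print(values.mean())          # 3.5 (mean of all six elements)
print(values.mean(axis=0))    # mean of each column
```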

Matplotlib is a third-party library for data visualization. It works well in combination with NumPy, SciPy, and Pandas.

Creating, Reading and Writing Data

To work with data, we need either to create coherent data structures to store it or to read it from an external source. Last but not least, we need to save it after any modifications we have made.

The two fundamental data structures are Series and DataFrame. To simplify, we could say that a Series is similar to a Python dictionary (key-value pairs) and a DataFrame is a two-dimensional matrix with its corresponding rows and columns. We use a DataFrame when we have more than one value for each key.
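The analogy can be sketched as follows: a Series holds one value per key, while a DataFrame holds several values (columns) per key. The column names and the values in the `stores` column are hypothetical and serve only as an illustration.

```python
import pandas as pd

# One value per key: a Series behaves much like a dictionary.
one_value = pd.Series({"Chicago": 1000, "New York": 1300})

# Several values per key: a DataFrame with one row per key.
# The "stores" column is hypothetical illustration data.
many_values = pd.DataFrame(
    {"sales": [1000, 1300], "stores": [4, 6]},
    index=["Chicago", "New York"],
)

print(one_value["Chicago"])               # 1000
print(many_values.loc["Chicago"])         # both values stored for the key
print(many_values.loc["Chicago", "stores"])  # 4
```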

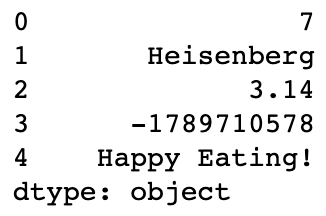

Creating a Series from scratch

Series is a one-dimensional labeled array capable of holding any data type (integers, strings, floating point numbers, Python objects, etc.). The axis labels are collectively referred to as the index. The basic methods to create a Series are:

A. From list:

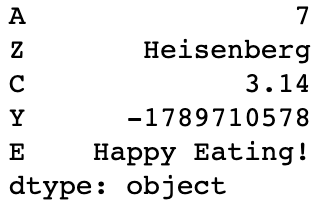

import pandas as pd

data = [7, 'Heisenberg', 3.14, -1789710578, 'Happy Eating!']

serie = pd.Series(data)

The index works like an address: it is how any data point in a DataFrame or Series can be accessed. In a DataFrame, both rows and columns are indexed: the row labels are called the index, and the column labels are simply the column names. We can specify the index this way:

data = [7, 'Heisenberg', 3.14, -1789710578, 'Happy Eating!']

index = ['A', 'Z', 'C', 'Y', 'E']

s = pd.Series(data, index=index)

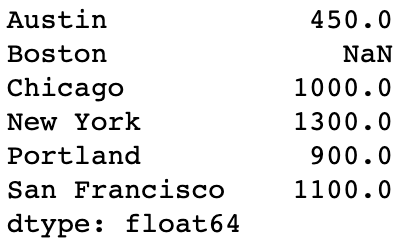
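To make the role of the index concrete, here is a small sketch that builds the same Series with the default index and with custom labels, then reads one element from each. The variable names `default_index` and `labeled` are introduced here only for illustration.

```python
import pandas as pd

data = [7, 'Heisenberg', 3.14, -1789710578, 'Happy Eating!']

# Without an explicit index, pandas labels the elements 0, 1, 2, ...
default_index = pd.Series(data)

# With an explicit index, each element is reached through its label.
labeled = pd.Series(data, index=['A', 'Z', 'C', 'Y', 'E'])

print(default_index.loc[1])   # Heisenberg
print(labeled.loc['Z'])       # Heisenberg
print(list(labeled.index))    # ['A', 'Z', 'C', 'Y', 'E']
```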

B. From dictionary:

d = {'Chicago': 1000, 'New York': 1300, 'Portland': 900, 'San Francisco': 1100,

'Austin': 450, 'Boston': None}

cities = pd.Series(d)

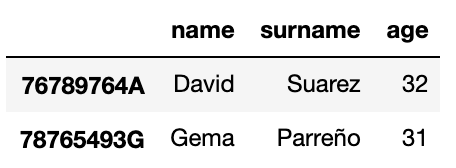
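When a Series is built from a dictionary, the keys become the index, and a None value (Boston here) is treated as missing data. The sketch below shows one way to detect and drop such entries with `isna` and `dropna`.

```python
import pandas as pd

d = {'Chicago': 1000, 'New York': 1300, 'Portland': 900,
     'San Francisco': 1100, 'Austin': 450, 'Boston': None}

cities = pd.Series(d)

# The dictionary keys are now the index labels.
print(list(cities.index))

# isna() flags missing entries; only Boston is missing here.
print(cities.isna())

# dropna() returns a new Series without the missing entries.
known = cities.dropna()
print(len(known))  # 5
```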

Creating a DataFrame from scratch

We can create a DataFrame in different ways, but three of the most common are:

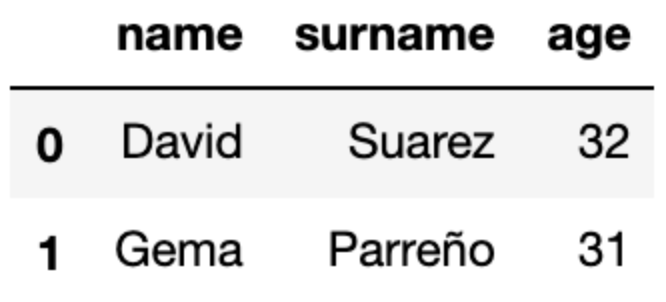

From dictionary

employees = pd.DataFrame([{"name":"David",

"surname":"Suarez",

"age":32},

{"name":"Gema",

"surname":"Parreño",

"age":31}], columns=["name","surname","age"])

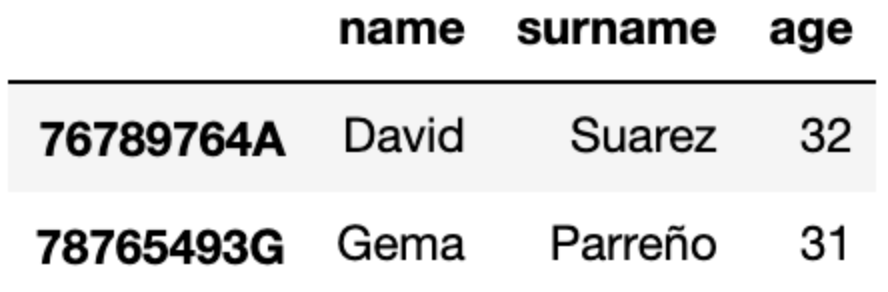
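The example above passes a list of dictionaries, one per row. As a sketch of an alternative, the same table can also be built from a single dictionary that maps each column name to its list of values. The variable names `by_rows` and `by_columns` are introduced here only for illustration.

```python
import pandas as pd

# One dictionary per row, as in the example above.
by_rows = pd.DataFrame(
    [
        {"name": "David", "surname": "Suarez", "age": 32},
        {"name": "Gema", "surname": "Parreño", "age": 31},
    ],
    columns=["name", "surname", "age"],
)

# One dictionary for the whole table: column name -> list of values.
by_columns = pd.DataFrame(
    {
        "name": ["David", "Gema"],
        "surname": ["Suarez", "Parreño"],
        "age": [32, 31],
    }
)

print(by_rows)
print(by_columns)
```

Both calls produce a table with the same columns and the same rows; which form is more convenient depends on how the data is already organized.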

If we want to label each row with a non-numeric index, we can pass the labels through the index parameter.

employees_by_dni = pd.DataFrame([{"name":"David",

"surname":"Suarez",

"age":32},

{"name":"Gema",

"surname":"Parreño",

"age":31}], columns=["name","surname","age"], index=["76789764A", "78765493G"])

From CSV

A CSV (comma-separated values) file is a delimited text file that uses commas to separate values. Each line of the file is a data record, and each record consists of one or more fields separated by commas.

To read an external file in CSV format, we can call the read_csv function in pandas:

import pandas as pd

from_csv = pd.read_csv('./path/to/file.csv', index_col=0)

Often the first column of a CSV file is the index, that is, the address through which we can access all the information of each row (for example, the label 0 would give us all the information of row 0, but the label can also be a string such as 'david'). With the index_col parameter we can specify which of the CSV columns we want to use as the row labels, that is, the attribute by which we will then access all the information in a row.
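The effect of index_col can be sketched without a real file by reading CSV text from memory. The CSV content and the `dni` column name below are hypothetical, and `io.StringIO` stands in for a file on disk.

```python
import io

import pandas as pd

# Hypothetical CSV content; io.StringIO stands in for a file on disk.
csv_text = (
    "dni,name,surname,age\n"
    "76789764A,David,Suarez,32\n"
    "78765493G,Gema,Parreño,31\n"
)

# Use the first column (dni) as the row labels.
by_dni = pd.read_csv(io.StringIO(csv_text), index_col=0)

# Use the second column (name) as the row labels instead.
by_name = pd.read_csv(io.StringIO(csv_text), index_col=1)

print(by_dni.loc["76789764A", "name"])  # David
print(by_name.loc["David", "age"])      # 32
```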

from_csv = pd.read_csv('./path/to/file.csv', index_col=3)

From Parquet

Like CSV, Parquet is a file format. The difference is that Parquet is designed as a columnar storage format to support complex data processing.

Parquet is column-oriented and designed to provide efficient columnar storage of data (blocks, row groups, column chunks…) compared to row-based formats like CSV.

To read an external file in Parquet format, we can call the read_parquet function in pandas:

import pandas as pd

from_parquet = pd.read_parquet('./path/to/file.parquet')

From JSON

If the external file is in JSON format:

from_json = pd.read_json('./path/to/file.json')

Writing a DataFrame

Once the DataFrame is created, we have several ways of saving the information to an external file, for example in CSV or JSON format. We use the to_csv and to_json pandas methods and save the file with the corresponding extension:

df_to_write.to_csv("/path/to/file.csv")

df_to_write.to_json("/path/to/file.json")

Last but not least, pandas can also read from and write to SQL databases.
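The writing calls can be sketched as a round trip: write a small DataFrame, then read it back. The sample data, the temporary directory, the in-memory SQLite database and the `employees` table name are all assumptions made for this illustration, not part of the original example.

```python
import os
import sqlite3
import tempfile

import pandas as pd

# Hypothetical data to write.
df_to_write = pd.DataFrame({"name": ["David", "Gema"], "age": [32, 31]})

# Write to a temporary directory so that nothing is left behind.
with tempfile.TemporaryDirectory() as tmp_dir:
    csv_path = os.path.join(tmp_dir, "file.csv")
    json_path = os.path.join(tmp_dir, "file.json")

    df_to_write.to_csv(csv_path)
    df_to_write.to_json(json_path)

    # to_csv also wrote the index, so read it back as the first column.
    from_csv = pd.read_csv(csv_path, index_col=0)
    from_json = pd.read_json(json_path)

# SQL round trip using an in-memory SQLite database.
con = sqlite3.connect(":memory:")
df_to_write.to_sql("employees", con, index=False)
from_sql = pd.read_sql("SELECT * FROM employees", con)
con.close()

print(from_csv)
print(from_json)
print(from_sql)
```

By default to_csv writes the row index as the first column, which is why the file is read back with index_col=0 here; passing index=False to to_csv would skip it.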

Training your abilities

If you want to take your Data Science skills further, we have created a course that you can download for free here.

In the next chapter, we will take a deep dive into the marvellous world of indexing, selection and assignment. We will also give an overview of filtering and data transformation with pandas.