
Among the many concerns humans have about artificial intelligence, AI bias stands out as one of the most significant. This article aims to shed light on the issue, exploring its implications and why it has become an increasingly pressing topic. Fortunately, solutions like COGNOS are emerging to help mitigate these biases and improve AI neutrality.

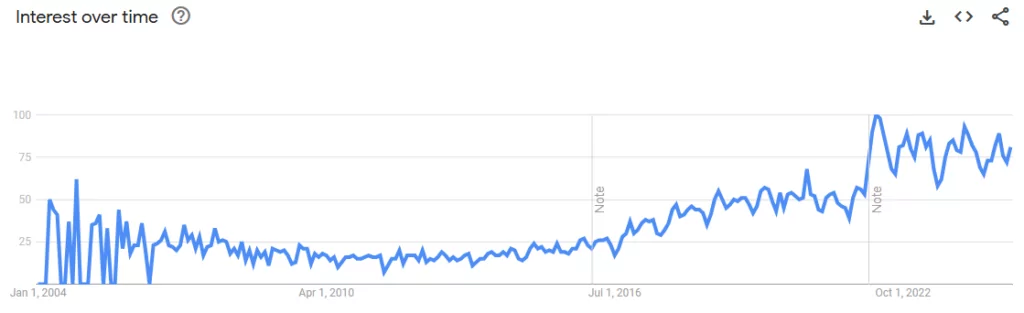

As reflected in Google search trends, interest in the term ‘bias’ related to Computer Science has been steadily rising over the past ten years, indicating a growing awareness of its potential impact on society.

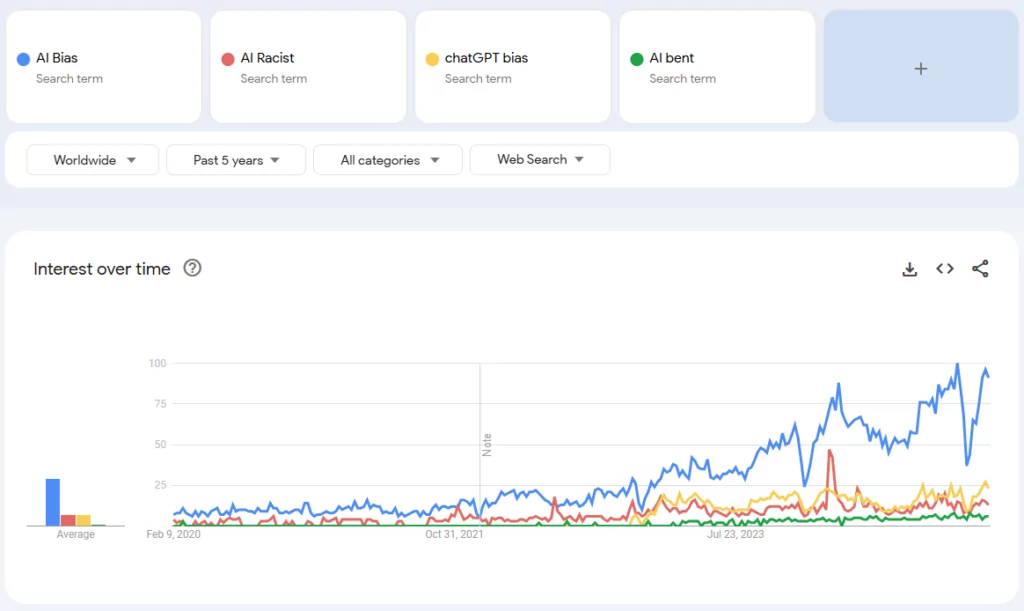

This growing interest is also evident in related search terms, such as ‘AI racist,’ ‘AI bias,’ and ‘AI bent,’ all of which have seen an upward trend in recent years.

Why have AI biases been receiving heightened critical scrutiny in the modern world?

The main reason is that, as we rely more and more on artificial intelligence to gather information, we have to ask whether we can accept the answers these systems provide without further scrutiny.

In this article, we delve into this issue, exploring the factors that contribute to AI’s potential biases and examining whether we can truly rely on AI agents to provide accurate, unbiased information.

After some introductory context on what bias means, we will explore concrete examples of biases found in real-world AI image-generation tools.

The visual nature of images makes these biases easier to spot, but they are present across a wide range of AI technologies, affecting how information is generated and interpreted.

What is AI Bias and Why Does It Matter?

According to the Cambridge Dictionary, bias is defined as “the action of supporting or opposing a particular person or thing in an unfair way, because of allowing personal opinions to influence your judgment.” Now, let’s apply this concept to AI agents.

When working with an AI that is not free from bias, we must acknowledge that its responses could be unfairly skewed by historical or societal opinions. This raises concerns about the accuracy and neutrality of the information it provides. Tools like COGNOS tackle this by ensuring that AI responses are grounded in a carefully controlled knowledge base, minimizing external bias.

Common Types of AI Bias and Their Implications

Bias in AI comes in various forms, each affecting how information is processed and presented. Here are some of the most common types:

- Historical Bias: AI models are trained on real-world data, but history itself is filled with underrepresentation, racism, sexism, and social inequalities. If an AI learns from biased historical data, it will inevitably reflect and perpetuate those same prejudices.

- Misrepresentation Bias: AI should provide a balanced and fair representation of different cultures, races, and perspectives. However, if certain groups are overrepresented or underrepresented, the AI may skew the narrative, leading to a distorted reality.

- Confirmation Bias: Humans tend to seek out information that aligns with their beliefs while ignoring contradictory evidence. Similarly, an AI trained on selective data can reinforce preexisting opinions rather than provide an objective perspective.

Where Does the Bias Come From?

Imagine we have an AI agent in a classroom with our kids. The AI agent learns just like the other students, absorbing everything the teacher shares. It quickly finishes its homework and excels in every subject. It learns about mathematics, language, history, and more.

Now, imagine that this school has a staff of 90% female teachers and only 10% male teachers, with a male principal. Additionally, all of them are Caucasian.

Given that the AI agent only knows what it is exposed to, it begins to associate teaching with Caucasian females and leadership with Caucasian males, creating a bias influenced by its limited environment.

While the human students also carry their own biases, they benefit from interactions outside the classroom—where they are exposed to a wide range of perspectives, experiences, and influences. This broader exposure helps them recognize that both males and females, from any race or background, are equally capable of teaching, and leadership is not confined to any one demographic.

On top of that, this AI student also absorbs knowledge from all over the internet. It encounters everything from racist posts on social media to sexist portrayals in movies and images promoting unrealistic body standards.

The problem here is that the misrepresentation of reality combined with the harmful information available online shapes the AI’s understanding in a distorted way.

Over time, this flawed input can lead the AI to reinforce biased views. That’s why AI solutions like COGNOS take a different approach—by relying on a controlled, client-approved knowledge base rather than unfiltered online data.

How Can Bias Reach AI Agents?

AI bias originates from the humans who design, train, and deploy these systems. Since AI models learn from real-world data, they inevitably inherit the biases present in society. Here’s how bias infiltrates AI systems:

- Cognitive Bias: Our brains naturally simplify reality by prioritizing dominant patterns, often leading to unconscious biases in judgment. When AI is designed and trained by humans, these biases can subtly influence the model, shaping how it interprets and processes information.

- Algorithmic Bias: Machine learning algorithms do not create bias on their own—they inherit it from the data and the assumptions made during development. If biased patterns exist in the data, the AI will replicate and amplify them, reinforcing existing inequalities.

- Incomplete Data: No dataset can perfectly represent the full diversity of the global population. Some groups are underrepresented, making it difficult for AI to fairly assess all perspectives. As a result, AI models often favor the most dominant or well-documented groups, leading to biased outcomes.

Since the world itself is discriminatory, the data used to train AI reflects those biases. In turn, AI systems become discriminatory, and their outputs can result in biased or unfair applications in real-world scenarios.

Addressing these issues requires careful data curation, ethical AI development, and ongoing monitoring to mitigate bias and ensure fairer, more inclusive AI systems.
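To make the amplification point under Algorithmic Bias concrete, here is a toy sketch in Python. It uses a deliberately naive, hypothetical "model" that, for each role, always outputs the gender it saw most often in training, and feeds it the 90/10 teacher split from the classroom analogy. Real models are far more complex, but a similar pull toward the majority pattern can turn a 90% skew in the data into a 100% skew in the output.

```python
from collections import Counter


def train_majority_model(pairs):
    """Learn, for each role, the single most frequent gender seen in training."""
    counts = {}
    for role, gender in pairs:
        counts.setdefault(role, Counter())[gender] += 1
    return {role: counter.most_common(1)[0][0] for role, counter in counts.items()}


def share(labels, value):
    """Fraction of `labels` equal to `value`."""
    return sum(1 for label in labels if label == value) / len(labels)


# Hypothetical training data mirroring the classroom analogy: 90 female, 10 male teachers.
training_data = [("teacher", "female")] * 90 + [("teacher", "male")] * 10
model = train_majority_model(training_data)

# Ask the model to "depict" 100 teachers.
outputs = [model["teacher"] for _ in range(100)]

print(f"female share in training data: {share([g for _, g in training_data], 'female'):.0%}")
print(f"female share in model output:  {share(outputs, 'female'):.0%}")
```

Running it prints a 90% female share for the training data and a 100% female share for the output: the minority present in the data disappears entirely from what the model produces.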

Real-World Examples of AI Bias in Action

Let’s ask a generic question of two free image-generation tools: ChatGPT and Craiyon.

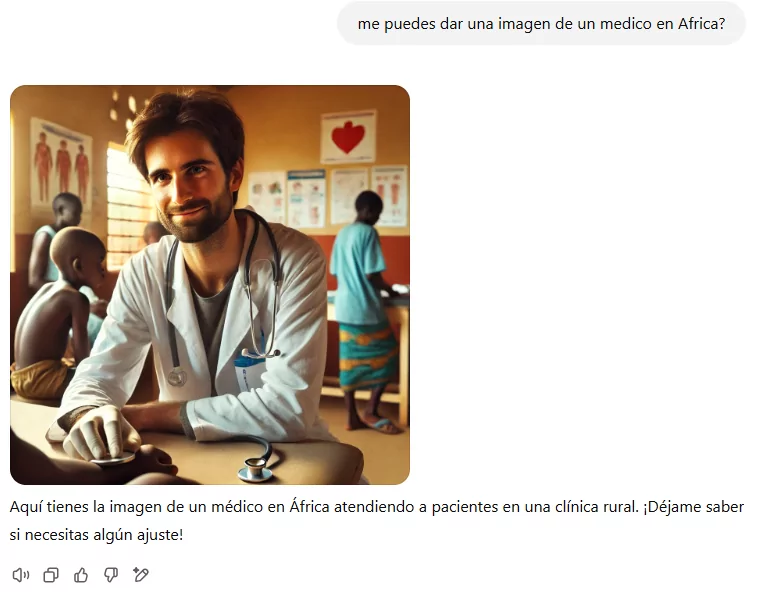

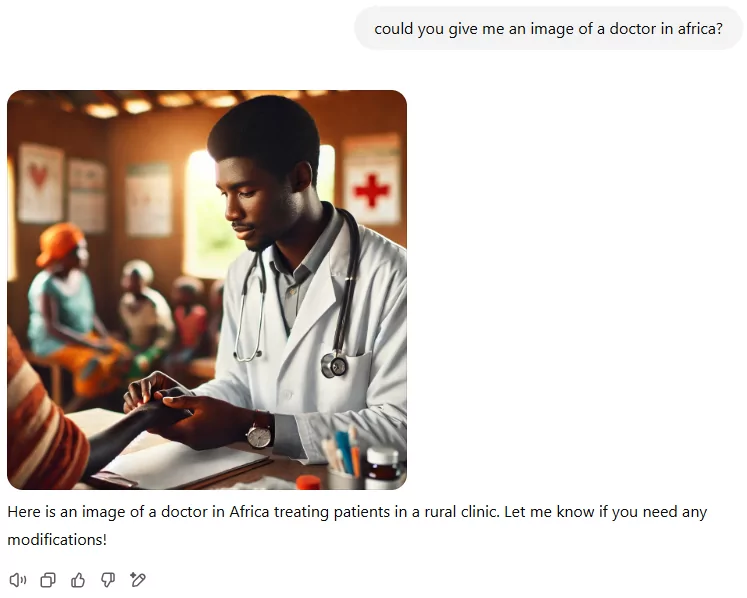

Could you give me an image of a doctor in Africa?

It’s important to note that with this request, we’re not specifying whether the doctor is male or female, nor are we indicating their exact country of origin.

ChatGPT:

When we pose the question in Spanish, the result shows a white doctor attending to Black patients. However, when we ask the same question in English, the image shifts to a Black doctor.

ChatGPT does not appear to exhibit a strong racial bias here, as the representation of different racial groups seems more balanced. However, it does display a noticeable gender bias, depicting men more often than women. This disparity points to a deeper issue: historical and societal patterns of representation continue to influence AI, shaping its responses and the way it portrays gender roles.

Craiyon:

Remember, we never specified the doctor’s ethnicity, only that they are in Africa.

Yet Craiyon returns an entirely Black panel, excluding every other racial group. Gender parity is also off: only 3 of the 9 people depicted are female.

To further investigate whether this issue is unique to Africa or more widespread, we change the question: ‘Could you generate an image of a doctor in China?’

The results reveal a similar pattern, with an even lower representation of females—only 2 out of 9 are women. This suggests that the imbalance in gender representation is not limited to a specific region, but may be a more pervasive issue.

When the prompt does not ask for a particular racial identity, showing only one race could also be seen as a form of racism. The same issue extends to sexism: for a doctor in Africa, 6 of the 9 images (about 67%) show Black male doctors, and for a doctor in China, 7 of 9 (about 78%) show male Chinese doctors. The representation skews heavily toward men, a clear gender bias.

It’s important to note that in our prompt, we simply stated that the doctor ‘IS’ in Africa or China—nothing about their gender or where they should specifically come from. The assumption made by the AI about race and gender reflects deeper societal biases embedded in the data it was trained on.
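The gender percentages for the Craiyon doctor panels follow directly from the tallies reported above (9 images per panel). A quick check in Python, using only those counts:

```python
def male_share(counts):
    """Fraction of depicted people who are male, given a {gender: count} tally."""
    return counts["male"] / sum(counts.values())


# Tallies reported in this article for the two 9-image Craiyon panels.
panels = {
    "doctor in Africa": {"female": 3, "male": 6},
    "doctor in China": {"female": 2, "male": 7},
}

for prompt, counts in panels.items():
    total = sum(counts.values())
    # With gender parity, about half of each panel (4.5 of 9) would be women.
    print(f"{prompt}: {counts['male']} of {total} male ({male_share(counts):.1%})")
```

This prints 66.7% for the Africa panel and 77.8% for the China panel.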

Could you generate an image of a nurse in Africa?

Now, let’s ask a similar question, this time about a sector where women are traditionally overrepresented: nursing.

In this case, all the generated images depict female nurses—100% female. This demonstrates another gender bias, where males are completely excluded from the representation.

Furthermore, similar to the previous examples, we observe a racial bias: simply because the question specifies Africa, all the nurses shown are Black. This reflects a narrow view that ties race to geography in a way that overlooks the diversity that exists in these professions across regions.

Could you generate an image of an engineer?

Let’s now flip the scenario.

At least we have one woman represented here! However, the issue remains that all the other individuals shown are young and white. This highlights a continued lack of diversity, both in terms of age and racial representation, even though the gender balance has improved in this specific case.

Could you generate an image of a homeless person in Chicago where I can see their faces?

The results predominantly feature people of color, with a clear focus on females, the elderly, and children. Interestingly, there is no representation of a white male between the ages of 30 and 40, which is often considered the typical or ‘ideal’ demographic in many contexts.

What happens when we add more detail to the prompt?

A woman working out

A man working out

Here, we observe a clear gender disparity in representation, particularly in the hyper-sexualization of women compared to men. Women are often depicted with a mysterious or distant gaze, emphasizing their appearance rather than their actions or abilities. In contrast, the men are consistently shown engaged in the workout itself, reinforcing traditional stereotypes about gender roles.

How to Mitigate AI Bias: Key Strategies for Ethical AI

While studies suggest that bias in popular AI tools has been gradually decreasing, there is still significant work to be done to ensure fairness and accuracy. Here are key steps to mitigate bias in AI systems:

Assess the Risk Level

Determine how sensitive your AI tool is to potential bias and what impact its decisions could have.

Evaluate the Dataset

If possible, analyze the data used to train the model. Does it accurately represent diverse groups, or are certain populations underrepresented?
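One simple way to start answering that question is to compare each group's share of the dataset against a reference distribution. The helper below is a hypothetical sketch, not a standard library function; the 50/50 reference and the 70/30 sample are invented for illustration. In practice the reference would come from a source you trust, such as census data for the population the tool serves.

```python
from collections import Counter


def representation_gaps(labels, reference_shares):
    """Return {group: dataset share - reference share} for each reference group.

    Negative values mean the group is underrepresented relative to the reference;
    groups that never appear in `labels` get a dataset share of 0.
    """
    counts = Counter(labels)
    total = len(labels)
    return {
        group: counts[group] / total - expected
        for group, expected in reference_shares.items()
    }


# Hypothetical example: a dataset of 100 labelled images, 70 men and 30 women.
labels = ["male"] * 70 + ["female"] * 30
gaps = representation_gaps(labels, {"male": 0.5, "female": 0.5})
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

Here the output is `male: +0.20` and `female: -0.20`, flagging women as underrepresented by 20 percentage points against the chosen reference.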

Conduct Bias Analysis

Measure the model’s performance across different demographic groups to identify any disparities in accuracy or outcomes between them.
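A minimal version of this analysis computes a metric separately for each group and looks at the gap between the best- and worst-served groups. The sketch below uses plain accuracy and invented labels for two hypothetical groups, "A" and "B"; depending on the application, other per-group metrics (false positive or false negative rates, for instance) may matter more.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Compute accuracy separately for each demographic group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


def max_gap(per_group):
    """Difference between the best- and worst-performing groups."""
    return max(per_group.values()) - min(per_group.values())


# Invented predictions for eight people in two groups.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 1, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
print(per_group, max_gap(per_group))
```

Group A is served perfectly while group B gets only one of four cases right (a gap of 0.75), even though overall accuracy of 5 out of 8 might look acceptable on its own.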

Ongoing Monitoring & Updates

AI models evolve as data changes over time, which means bias can shift or emerge in new ways. Regular monitoring and evaluation are crucial to maintaining fairness.
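As a sketch of what regular monitoring could look like, the helper below tracks the share of one group in a tool's outputs for each period and flags any period that drifts from a target by more than a tolerance. The period names, target, and tolerance are hypothetical choices you would set for your own application.

```python
def flag_drift(outputs_by_period, group, target, tolerance):
    """Return {period: observed share} for periods outside target +/- tolerance."""
    flagged = {}
    for period, labels in outputs_by_period.items():
        if not labels:
            continue  # nothing generated in this period, so nothing to measure
        observed = sum(1 for label in labels if label == group) / len(labels)
        if abs(observed - target) > tolerance:
            flagged[period] = observed
    return flagged


# Hypothetical monthly samples of the gender depicted in generated images.
history = {
    "2024-01": ["female"] * 5 + ["male"] * 5,
    "2024-02": ["female"] * 4 + ["male"] * 6,
    "2024-03": ["female"] * 3 + ["male"] * 7,
}
print(flag_drift(history, group="female", target=0.5, tolerance=0.15))
```

Only the third month is flagged (`{'2024-03': 0.3}`): the share of women has drifted gradually, and a fixed threshold catches it once the drift becomes large.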

Maintain Human Oversight

AI should not operate without human intervention. Some tasks must be performed by humans, while others—though manageable by AI—should still undergo human review before being fully trusted.
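One common way to put this into practice is to route outputs to a person whenever the task is sensitive or the system is unsure. The `Decision` type, the `sensitive` flag, and the 0.8 threshold below are hypothetical; what counts as sensitive, and how confidence is measured, depends on the tool.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    answer: str
    confidence: float  # score in [0, 1] reported by the model or a separate estimator
    sensitive: bool    # e.g. the task affects hiring, healthcare, or credit


def route(decision, threshold=0.8):
    """Send sensitive or low-confidence outputs to a human reviewer."""
    if decision.sensitive or decision.confidence < threshold:
        return "human_review"
    return "auto_approve"


print(route(Decision("Shortlist candidate 12", confidence=0.95, sensitive=True)))
print(route(Decision("Summarize meeting notes", confidence=0.92, sensitive=False)))
```

The first call returns `human_review` despite high confidence, because the task is sensitive; the second is approved automatically.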

By taking these steps, we can minimize bias, enhance AI fairness, and ensure ethical decision-making in AI applications. AI tools like COGNOS already implement these principles, providing businesses with a more reliable and unbiased AI solution.

COGNOS: The AI Solution for Reducing Bias and Improving Accuracy

COGNOS is a neutral AI tool designed to ensure that the knowledge it provides is based exclusively on the client’s own data. Unlike general AI models that pull information from vast and potentially biased datasets, COGNOS operates within a controlled knowledge environment—allowing for more accurate, reliable, and bias-aware responses.

Controlled Knowledge Sources

COGNOS is not pre-loaded with any external data. Instead, it relies entirely on the client’s private knowledge database, ensuring that all responses are derived from verified, client-approved sources. This eliminates the risk of AI learning from unreliable, biased, or misleading public data.
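The general idea of answering only from an approved knowledge base, and declining otherwise, can be sketched as below. This is not COGNOS's implementation, which the article does not describe: the keyword-overlap scoring, the `min_overlap` threshold, and the sample documents are all hypothetical, and production retrieval systems usually rely on more robust search (for example, embedding-based similarity).

```python
import re


def tokenize(text):
    """Lower-case word set; a deliberately crude stand-in for real retrieval."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def answer_from_knowledge_base(question, approved_docs, min_overlap=2):
    """Return (doc_id, text) for the best-matching approved document, or None.

    Returning None means "I don't know": the function never falls back to
    knowledge outside `approved_docs`.
    """
    question_words = tokenize(question)
    best_id, best_score = None, 0
    for doc_id, text in approved_docs.items():
        score = len(question_words & tokenize(text))
        if score > best_score:
            best_id, best_score = doc_id, score
    if best_score < min_overlap:
        return None
    return best_id, approved_docs[best_id]


# Hypothetical client-approved documents.
docs = {
    "refund-policy": "Refunds are issued within 30 days of purchase.",
    "shipping": "Orders ship within 2 business days.",
}
print(answer_from_knowledge_base("How many days until refunds are issued?", docs))
print(answer_from_knowledge_base("Who won the 2018 World Cup?", docs))
```

The first question is answered from the `refund-policy` document; the second falls outside the approved material, so the function returns `None` instead of guessing.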

Bias Identification & Documentation Support

To further ensure fairness, the COGNOS support team collaborates with the client to review and address potential biases within their documentation. By working together, we can:

- Identify gaps in data that may unintentionally favor certain perspectives.

- Ensure a balanced and comprehensive knowledge base before deployment.

- Provide guidelines for data updates and continuous monitoring.

Narrowed Focus with a Small Language Model (SLM)

Unlike general-purpose AI tools that use Large Language Models (LLMs)—which can introduce broad societal biases—COGNOS operates on a Small Language Model (SLM). This design ensures that:

- The AI remains focused on a specific domain, rather than pulling from wide-ranging, potentially biased sources.

- It only provides information related to the specific topics it was trained on, reducing the risk of unintentional bias in unrelated areas.

- It delivers more precise, contextually relevant answers, without external influence from pre-existing biases found in massive AI models.

By limiting knowledge to the client’s controlled dataset, conducting bias assessments, and using an SLM for domain-specific expertise, COGNOS provides a reliable, fair, and bias-conscious AI experience—tailored to the client’s needs while minimizing risks associated with traditional AI bias.