In today’s article, I’d like to focus on the Circuit Breaker pattern. Some background: in a previous article we talked about how to handle transient failures by applying the Retry pattern. As a reminder, transient failures are errors that occur for a brief period and then resolve on their own.

The Retry pattern works well with these kinds of failures because we know (or think we know) that they won’t recur on a later call. However, there are times when a transient failure turns into a total failure. In that situation the Retry pattern stops being useful and may even make things worse by consuming critical resources. When facing an issue like this, it’s preferable for the operation to fail immediately so the system can handle it. It’s also desirable to invoke the service again only when there’s a reasonable chance of a correct result.

And here’s where the Circuit Breaker pattern enters the fray.

The Circuit Breaker Pattern

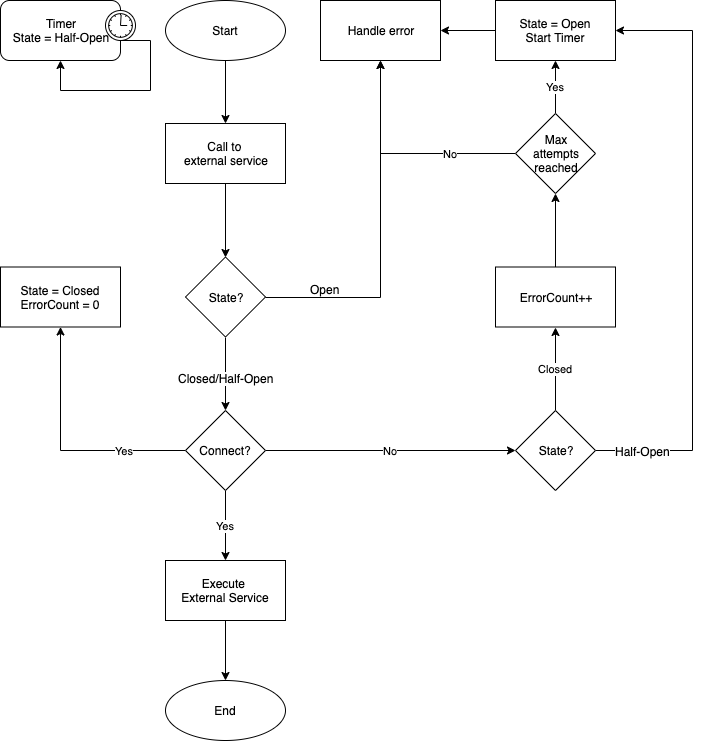

The Circuit Breaker pattern prevents an application from repeatedly attempting an operation that is likely to fail, so it can carry on with its execution without wasting resources while the problem persists. The pattern can also detect when the problem has been solved, so the affected operation can be executed again. In a way, we can see this pattern as a proxy between our application and the remote service, implemented as a state machine that mimics the behavior of a switch in an electrical circuit.

The states:

- Closed: The circuit is closed and current flows uninterrupted. This is the initial state: everything is working as expected and calls to the service/resource are made normally.

- Open: The circuit is open and the flow is interrupted. In this state all calls to the service/resource fail immediately: they’re not executed, and the last known exception is returned to the application.

- Half-Open: The circuit is half-open (or half-closed), giving the flow a chance to be restored. In this state the application retries the request to the service/resource that was failing.

State changes:

As we’ve said, the initial state is Closed. The proxy keeps a counter of the failures that occur when calling the service. If this count exceeds the threshold specified in the proxy’s configuration, the state changes to Open and a timer starts (this part is very important).

While the state is Open, no calls reach the service; the last known error is returned automatically. How long the proxy stays in this state is determined by the timer’s configuration.

When the timer expires, the state changes to Half-Open. In this state the service can be called at least one more time, so that:

- If the request succeeds, it’s assumed the failure has been corrected: the failure counter is reset to 0 and the state returns to Closed. Everything’s working correctly again.

- If, on the contrary, the request fails, it’s assumed the failure is still there: the state is set back to Open and the timer is restarted. The service/resource is still unavailable.
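The transitions just described can be summarized as a small pure function. This is only a sketch: `BreakerEvent` and `nextState` are hypothetical names introduced here for illustration, and `CircuitBreakerState` mirrors the enum used by the implementation in this article.

```kotlin
enum class CircuitBreakerState { CLOSED, OPEN, HALF_OPEN }

// Hypothetical events that can cause a state change.
enum class BreakerEvent { CALL_SUCCEEDED, CALL_FAILED, FAILURE_THRESHOLD_EXCEEDED, TIMER_EXPIRED }

// Returns the state the breaker moves to when `event` happens in state `current`.
fun nextState(current: CircuitBreakerState, event: BreakerEvent): CircuitBreakerState =
    when (current) {
        // A single failure only increments the counter; the circuit opens past the threshold.
        CircuitBreakerState.CLOSED ->
            if (event == BreakerEvent.FAILURE_THRESHOLD_EXCEEDED) CircuitBreakerState.OPEN
            else CircuitBreakerState.CLOSED
        // Nothing but the timer can move the breaker out of OPEN.
        CircuitBreakerState.OPEN ->
            if (event == BreakerEvent.TIMER_EXPIRED) CircuitBreakerState.HALF_OPEN
            else CircuitBreakerState.OPEN
        // The trial call decides: success closes the circuit, failure reopens it.
        CircuitBreakerState.HALF_OPEN -> when (event) {
            BreakerEvent.CALL_SUCCEEDED -> CircuitBreakerState.CLOSED
            BreakerEvent.CALL_FAILED, BreakerEvent.FAILURE_THRESHOLD_EXCEEDED -> CircuitBreakerState.OPEN
            BreakerEvent.TIMER_EXPIRED -> CircuitBreakerState.HALF_OPEN
        }
    }

fun main() {
    var state = CircuitBreakerState.CLOSED
    val events = listOf(
        BreakerEvent.FAILURE_THRESHOLD_EXCEEDED,
        BreakerEvent.TIMER_EXPIRED,
        BreakerEvent.CALL_FAILED,
        BreakerEvent.TIMER_EXPIRED,
        BreakerEvent.CALL_SUCCEEDED
    )
    for (event in events) {
        val next = nextState(state, event)
        println("$state + $event -> $next")
        state = next
    }
}
```

Keeping the transitions in one function like this makes them easy to test separately from the timer and the remote call.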

This would be an example of a simple implementation in Kotlin:

```kotlin
import kotlin.reflect.KFunction0

// Member of the circuit breaker class: state, lastFailure, openTimeout, isTimerExpired
// and the helper functions are defined elsewhere in that class.
@Throws(Exception::class)
fun run(action: KFunction0<String>) {
    logger.info("state is $state")
    if (state == CircuitBreakerState.CLOSED) {
        try {
            action.invoke()
            resetCircuit()
            logger.info("Success calling external service")
        } catch (ex: Exception) {
            // Record the failure, then report it to the caller with its cause attached.
            handleException(ex)
            throw Exception("Something was wrong", ex)
        }
    } else {
        if (state == CircuitBreakerState.HALF_OPEN || isTimerExpired) {
            // The timer has run out: let one trial call through.
            state = CircuitBreakerState.HALF_OPEN
            logger.info("Time to retry...")
            try {
                action.invoke()
                logger.info("Success when HALF_OPEN")
                closeCircuit()
            } catch (ex: Exception) {
                logger.info("Fails when HALF_OPEN")
                openCircuit(ex)
                throw Exception("Fails when HALF_OPEN", ex)
            }
        } else {
            // Still OPEN: fail fast without calling the service.
            val retryAt = lastFailure!!.plus(openTimeout)
            logger.info("Circuit is still open. Retrying at $retryAt")
            throw Exception("Circuit is still open. Retrying at $retryAt")
        }
    }
}
```
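The `run` function above relies on members that the snippet does not show (`state`, `lastFailure`, `openTimeout`, `isTimerExpired`, `resetCircuit`, `handleException`, `openCircuit` and `closeCircuit`). The sketch below is one plausible way to fill them in, not the original code: the member names come from the snippet, while the class name `CircuitBreakerSupport`, the types, the `maxFailures` threshold, the `lastException` field and the injectable `now` time source are all assumptions.

```kotlin
import java.time.Duration
import java.time.Instant

enum class CircuitBreakerState { CLOSED, OPEN, HALF_OPEN }

// Assumed shape of the state that run() depends on. run() itself would be a member
// of this class, which is why it could assign `state` directly.
class CircuitBreakerSupport(
    private val maxFailures: Int = 3,                      // hypothetical failure threshold
    val openTimeout: Duration = Duration.ofSeconds(5),     // how long the circuit stays open
    private val now: () -> Instant = { Instant.now() }     // injectable so tests can control time
) {
    var state = CircuitBreakerState.CLOSED
        private set
    var failureCount = 0
        private set
    var lastFailure: Instant? = null
        private set
    var lastException: Exception? = null
        private set

    // True once openTimeout has elapsed since the last recorded failure.
    val isTimerExpired: Boolean
        get() = lastFailure?.let { !now().isBefore(it.plus(openTimeout)) } ?: false

    // A call succeeded while CLOSED: forget earlier failures.
    fun resetCircuit() {
        failureCount = 0
    }

    // A call failed while CLOSED: count it and open the circuit once the count exceeds the threshold.
    fun handleException(ex: Exception) {
        failureCount++
        lastFailure = now()
        if (failureCount > maxFailures) openCircuit(ex)
    }

    // Open (or reopen) the circuit, remember the error and restart the timer.
    fun openCircuit(ex: Exception) {
        state = CircuitBreakerState.OPEN
        lastFailure = now()
        lastException = ex
    }

    // The HALF_OPEN trial call succeeded: return to normal operation.
    fun closeCircuit() {
        state = CircuitBreakerState.CLOSED
        failureCount = 0
        lastFailure = null
        lastException = null
    }
}

fun main() {
    var fakeNow = Instant.parse("2024-01-01T00:00:00Z")
    val breaker = CircuitBreakerSupport(maxFailures = 2, now = { fakeNow })
    repeat(3) { breaker.handleException(IllegalStateException("boom")) }
    println("state=${breaker.state}, timer expired=${breaker.isTimerExpired}")
    fakeNow = fakeNow.plusSeconds(5)
    println("after 5s: timer expired=${breaker.isTimerExpired}")
}
```

This sketch is not thread-safe; a breaker shared between threads would need synchronization or atomic state.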

Of course, implementations can be much more sophisticated. For example, an implementation could lengthen the timer according to the number of times the request fails while in the Half-Open state.

As with any other pattern, the complexity of a Circuit Breaker implementation should match our application’s real needs and the business requirements.

There are libraries that make the Circuit Breaker pattern easy to apply, such as Spring Retry, where the pattern is implemented with just a couple of simple annotations.
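As a rough sketch of that idea, the open timeout could double after every failed Half-Open trial, up to a cap. The function name `nextOpenTimeout`, its parameters and the five-minute default cap are hypothetical, and doubling is just one possible growth policy.

```kotlin
import java.time.Duration

// Hypothetical helper: lengthen the open timeout with each consecutive failed HALF_OPEN trial.
fun nextOpenTimeout(
    base: Duration,
    failedTrials: Int,
    max: Duration = Duration.ofMinutes(5)
): Duration {
    require(failedTrials >= 0) { "failedTrials must not be negative" }
    // Limit the exponent so the multiplier stays bounded (at most 2^20).
    val factor = 1L shl failedTrials.coerceAtMost(20)
    val candidate = base.multipliedBy(factor)
    // Never wait longer than the configured cap.
    return if (candidate > max) max else candidate
}

fun main() {
    val base = Duration.ofSeconds(5)
    for (trials in 0..7) {
        println("failed trials=$trials -> open for ${nextOpenTimeout(base, trials)}")
    }
}
```

The cap matters: without it, a long outage would push the timeout so high that the breaker would take a long time to notice the service had recovered.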

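```kotlin
import org.springframework.remoting.RemoteAccessException
import org.springframework.retry.annotation.CircuitBreaker
import org.springframework.retry.annotation.Recover

@CircuitBreaker(maxAttempts = 3, openTimeout = 5000L, resetTimeout = 20000L)
fun run(): String {
    logger.info("Calling external service...")
    // Simulate a remote call that fails roughly half of the time.
    if (Math.random() > 0.5) {
        throw RemoteAccessException("Something was wrong...")
    }
    logger.info("Success calling external service")
    return "Success calling external service"
}

// Fallback used when run() cannot return a result.
@Recover
private fun fallback_run(): String {
    logger.error("Fallback for external service")
    return "Success on fallback"
}
```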

As we can see, the implementation with this library is indeed very simple: the only thing we must do is add the @CircuitBreaker annotation to the method that makes the request.

We’ll set it up with the parameters:

- maxAttempts: maximum number of failed attempts before the recovery method is called.

- openTimeout: time window, in milliseconds, within which the maximum number of failed attempts must occur for the circuit to open.

- resetTimeout: time, in milliseconds, that the circuit stays Open before moving to Half-Open.

All that’s left is adding the @Recover annotation to the method that will handle the failure when the circuit is in the Open state.
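One setup detail the snippet does not show: the annotations used here match those of Spring Retry, and in that library they only take effect once retry support has been enabled on a configuration class. A minimal sketch, assuming Spring Retry and an AOP dependency are on the classpath (the class name `RetryConfiguration` is hypothetical):

```kotlin
import org.springframework.context.annotation.Configuration
import org.springframework.retry.annotation.EnableRetry

// Enables processing of Spring Retry annotations such as @CircuitBreaker and @Recover.
@Configuration
@EnableRetry
class RetryConfiguration // hypothetical name; any @Configuration class would do
```

Because the breaker is applied through a proxy, the annotated method has to live in a Spring-managed bean and be called from outside that bean; a call from another method of the same class would bypass the proxy.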

This pattern provides stability and resilience to our applications and helps to avoid resource consumption that directly impacts our system’s performance.

Just as in our Retry pattern article, we highly recommend keeping a log of all failed operations, since it can be a great help in sizing a project’s infrastructure correctly and in finding recurring failures that the application’s failure handling would otherwise silence.

You can find the complete examples from this article on our GitHub.
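A very small sketch of such a register could look like the following. `FailureRecord`, `FailureRegister` and the service names are hypothetical, and a real system would more likely send this information to its logging or metrics infrastructure than keep it in memory.

```kotlin
import java.time.Instant

// One recorded failure: which operation failed, when, and why.
data class FailureRecord(val operation: String, val at: Instant, val reason: String)

// Hypothetical in-memory register of failed operations (not thread-safe).
class FailureRegister {
    private val records = mutableListOf<FailureRecord>()

    // Store the failure, falling back to the exception class name when there is no message.
    fun record(operation: String, ex: Exception, at: Instant = Instant.now()) {
        records += FailureRecord(operation, at, ex.message ?: ex.javaClass.simpleName)
    }

    // Number of failures per operation, useful for spotting recurring problems.
    fun countsByOperation(): Map<String, Int> =
        records.groupingBy { it.operation }.eachCount()
}

fun main() {
    val register = FailureRegister()
    register.record("inventory-service", RuntimeException("timeout"))
    register.record("inventory-service", RuntimeException("timeout"))
    register.record("billing-service", RuntimeException("503"))
    println(register.countsByOperation())
}
```

Calling `record` from the breaker’s failure handling and from the fallback method would capture failures that the caller never sees.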

Author

Software developer with over 24 years of experience working with Java, Scala, .Net, JavaScript, Microservices, DDD, TDD, CI/CD, AWS, Docker, Spark