Challenges

When working in a microservices architecture, we face the problem of communication between our applications. Services must often collaborate in order to handle a request. As service instances are typically processes running on multiple machines or virtual instances, they must interact using inter-process communication.

Services can use synchronous request/response-based communication mechanisms, such as REST or gRPC, or asynchronous message-based communication mechanisms, such as AMQP. In this article, we will focus on asynchronous messaging and on how preserving message order helps maintain eventual consistency.

Eventual Consistency Through Message Ordering

Preserving message ordering can be challenging when scaling out message receivers. To process messages concurrently, it is common to run multiple instances of a service, because throughput increases when multiple threads and service instances process messages at the same time. The difficulty is that two related messages handled by different instances may be processed out of order.

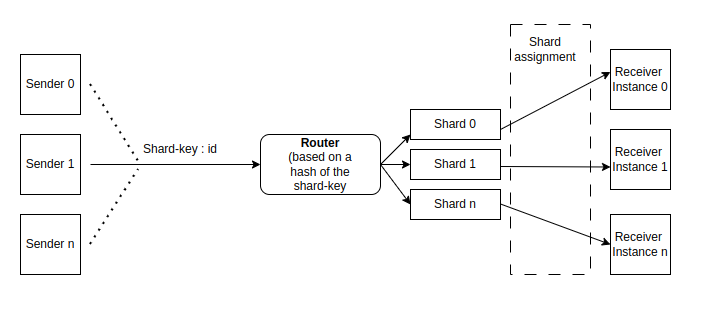

Modern message brokers such as Apache Kafka and AWS Kinesis use sharded (partitioned) channels as a common solution. A sharded channel consists of two or more shards, each of which behaves like a channel. The sender specifies a shard key in the message’s header. The message broker uses the shard key to assign the message to a particular shard/partition. Finally, the message broker groups together multiple instances of a receiver and treats them as the same logical receiver, assigning each shard to a single receiver instance.

All events that share a shard key (for example, the ID of the entity they refer to) are published to the same shard, which is read by a single consumer instance. As a result, those messages are processed in the order in which they were published, which is what allows the consumers to reach eventual consistency.
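The mechanism described above can be sketched in a few lines of Python. This is only an in-memory illustration, not how any particular broker is implemented: the shard count, the `shard_for` and `run` helpers, the order IDs, and the use of CRC32 as the hash are all assumptions made for the example.

```python
import queue
import threading
import zlib

NUM_SHARDS = 3  # assumed shard count for the illustration


def shard_for(shard_key: str) -> int:
    # CRC32 is a stand-in for whatever stable hash a real broker uses.
    return zlib.crc32(shard_key.encode("utf-8")) % NUM_SHARDS


def run(events):
    """Publish (shard_key, payload) events and return what each key's receiver saw."""
    shards = [queue.Queue() for _ in range(NUM_SHARDS)]
    processed = {key: [] for key, _ in events}  # per-key processing log

    def receiver(shard):
        # Exactly one receiver drains each shard, so the events of a given
        # key are handled in the order in which they were published.
        while True:
            item = shard.get()
            if item is None:  # sentinel: no more messages
                break
            key, payload = item
            processed[key].append(payload)

    workers = [threading.Thread(target=receiver, args=(s,)) for s in shards]
    for worker in workers:
        worker.start()
    for key, payload in events:
        shards[shard_for(key)].put((key, payload))
    for shard in shards:
        shard.put(None)
    for worker in workers:
        worker.join()
    return processed


events = [
    ("order-1", "created"),
    ("order-2", "created"),
    ("order-1", "paid"),
    ("order-2", "cancelled"),
    ("order-1", "shipped"),
]
result = run(events)
```

Even though the receivers run concurrently, `result["order-1"]` always lists its events in publish order, because every event for that key goes through the same shard and the same receiver. Order across different keys is not guaranteed, which is the trade-off that makes parallel processing possible.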

Using Apache Kafka Consumer Groups

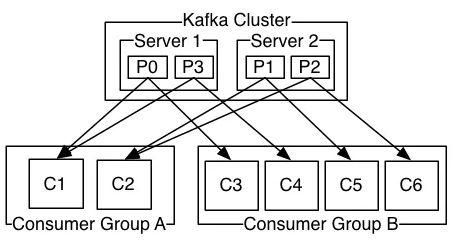

In Kafka, consumer groups let us process the events of a topic in parallel. Each consumer in a group is assigned a subset of the topic's partitions and processes the events from those partitions. On the producer side, there are three primary partitioning strategies: Round Robin Partitioning, Message Key Partitioning, and Custom Partitioning.
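To make the consumer side concrete, here is a simplified sketch of how partitions might be spread over the members of one consumer group. It is not Kafka's actual assignment logic, which is pluggable and more involved; the `assign_partitions` function and the consumer names are hypothetical.

```python
def assign_partitions(partitions, consumers):
    """Give each partition to exactly one consumer, cycling through the group."""
    assignment = {consumer: [] for consumer in consumers}
    for index, partition in enumerate(sorted(partitions)):
        owner = consumers[index % len(consumers)]
        assignment[owner].append(partition)
    return assignment


# Six partitions shared by three consumers: two partitions each.
balanced = assign_partitions(range(6), ["c1", "c2", "c3"])

# Two partitions and three consumers: one consumer is left without work.
oversized = assign_partitions(range(2), ["c1", "c2", "c3"])
```

Two properties of the sketch matter for ordering and scaling: every partition has exactly one owner inside the group, and adding consumers beyond the number of partitions only produces idle members (`oversized["c3"]` is an empty list).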

We will focus on Message Key Partitioning, which is what the default partitioner applies when a message key is provided. The key is passed through a hashing function, and all messages with the same key are placed on the same partition, preserving message order. However, we must be cautious, because this strategy can leave partitions unevenly loaded: partitions that hold more active message keys grow larger than those that hold less active ones. On the consumer side, we group consumers into consumer groups, in which each consumer reads from one or more partitions.
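The difference between keyed and keyless publishing can be illustrated with a small producer-side sketch. The `SketchPartitioner` class is hypothetical, CRC32 is used only as a stand-in for the client's real hash function, and the round-robin fallback for keyless messages follows the strategy named above rather than the exact behavior of any specific client version.

```python
import itertools
import zlib


class SketchPartitioner:
    """Pick a partition: hash the key if there is one, otherwise rotate."""

    def __init__(self, num_partitions: int):
        self.num_partitions = num_partitions
        self._rotation = itertools.cycle(range(num_partitions))

    def partition(self, key=None) -> int:
        if key is None:
            # No key: spread messages across partitions, with no ordering guarantee.
            return next(self._rotation)
        # Same key, same hash, same partition: per-key order is preserved.
        return zlib.crc32(key.encode("utf-8")) % self.num_partitions


partitioner = SketchPartitioner(num_partitions=4)
keyless = [partitioner.partition() for _ in range(5)]
keyed = [partitioner.partition("user-42") for _ in range(5)]
```

Here `keyless` walks through the partitions in turn, while every entry of `keyed` is the same partition number. Note that the mapping depends on the partition count, so changing the number of partitions changes where a key lands.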

Choosing the right shard key can be challenging. We need to distribute messages evenly, and a poor choice leaves some consumers overloaded while others receive very few messages. For instance, in an e-commerce application, if we set the product ID as the shard key, a few popular products can concentrate most of the traffic and overload the consumers that read their partitions. To solve this, we could use the user ID as the shard key, assuming that purchases are distributed roughly equally among users.
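The effect of the key choice can be checked with a rough simulation. The workload below is synthetic and deliberately extreme (80% of purchases go to a single product, and every purchase comes from a different user); the partition count, the field names, and the CRC32 hash are assumptions for the sake of the example.

```python
import zlib
from collections import Counter

NUM_PARTITIONS = 4  # assumed partition count


def partition_for(key: str) -> int:
    # CRC32 stands in for the broker's real key hash.
    return zlib.crc32(key.encode("utf-8")) % NUM_PARTITIONS


def load_per_partition(keys):
    """Count how many messages land on each partition for a sequence of keys."""
    return Counter(partition_for(key) for key in keys)


# Synthetic workload: 1,000 purchases, 800 of them for the popular product "p-1".
purchases = [
    {"user_id": f"u-{i}", "product_id": "p-1" if i % 5 else f"p-{i}"}
    for i in range(1000)
]

by_product = load_per_partition(p["product_id"] for p in purchases)
by_user = load_per_partition(p["user_id"] for p in purchases)

print("busiest partition, product ID as key:", max(by_product.values()))
print("busiest partition, user ID as key:   ", max(by_user.values()))
```

With the product ID as the key, at least 800 of the 1,000 messages end up on one partition, so a single consumer does most of the work. With the user ID as the key, the load is spread much more evenly. Running a count like this against a sample of real traffic is a cheap way to evaluate a candidate shard key before committing to it.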

Conclusion

In a distributed architecture, inter-process communication plays a key role. To increase availability, we use asynchronous messaging. A good way to design a message-based architecture is to use a messages and channels model. To scale consumer instances while keeping message order, we can use sharded channels with the message key as the shard key and assign each shard to a single consumer, as consumer groups do in Apache Kafka.

If you liked this article about eventual consistency through message ordering, I suggest you keep an eye on Apiumhub’s blog to read more content on software architecture and eventual consistency.