Hi everyone!!

This article is based on the scenario presented in our article ‘User Story Splitting’. If you haven’t had the chance to take a look at it yet, we encourage you to do so before reading on. We just want to be sure that you are not missing context but, just as a quick recap…

- …we are working in the product department of a company called ‘Frame Store’. We sell frames …

- … what we usually get from our business people is a big, inspirational idea (sometimes not 100% realistic or rational!) for a very nice and cool feature …

And… these are the assumptions we would like to validate:

- If the users are able to customize their menu, they will be able to find the options easily, improving performance and satisfaction.

- If the users are able to search menu options, they will be able to find the options easily, improving performance and satisfaction.

By validating these hypotheses, we seek to reduce the risk of failure and to be sure we are giving real value to the user.

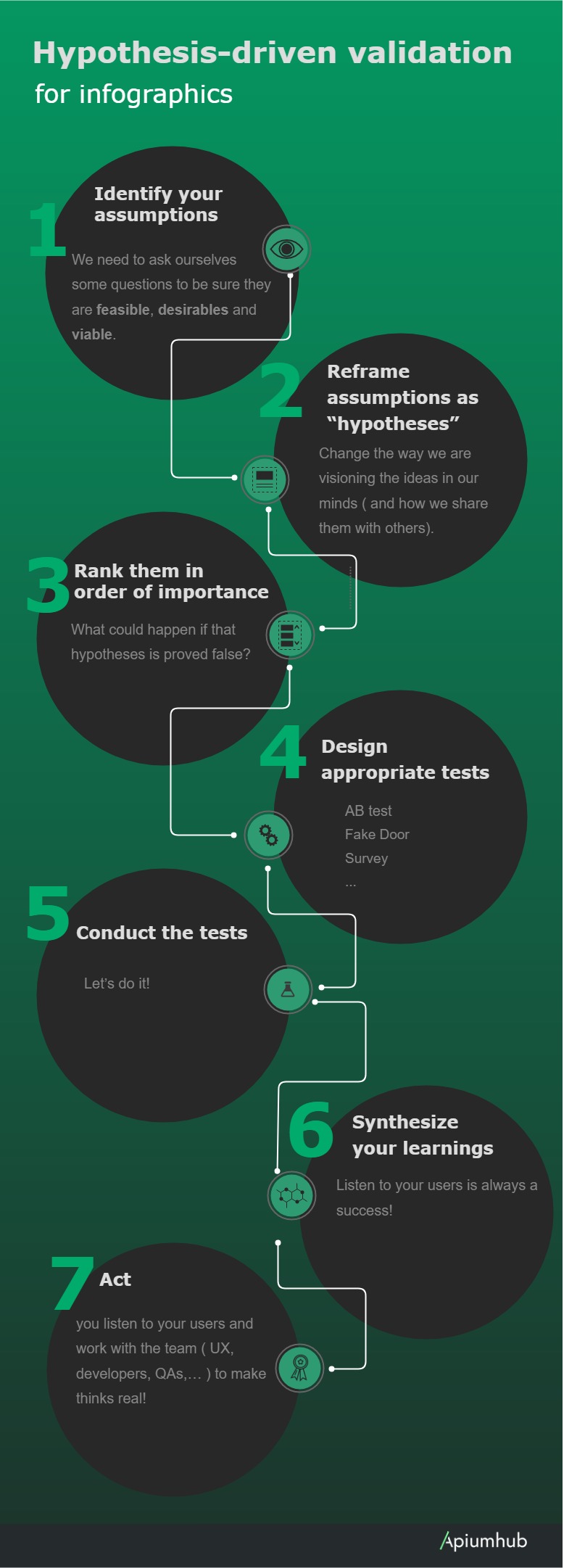

Let’s jump to the next sections, where you will discover in more detail how you can reduce these risks. It is ‘as simple as’ following a hypothesis-driven validation process or, in other words, testing each hypothesis with empirical data before making any decision and moving forward.

Identify your assumptions

For our example, we have the following assumptions:

- If the users are able to customize their menu, they will be able to find the options easily, improving performance and satisfaction.

- If the users are able to search menu options, they will be able to find the options easily, improving performance and satisfaction.

We need to ask ourselves some questions to be sure they are feasible, desirable and viable.

Some examples of these questions could be…

- Are we facing some technological challenges that could make these hypotheses not realistic?

- Are we trying to solve a real pain point for our users?

- Does it make sense for our company to improve that part of the process right now?

With these 3 questions we can get a very high-level idea of whether our hypotheses are feasible (the first question), desirable (the second) and viable (the third). We could iterate more on each of them, trying to go deeper on any of these characteristics. This is just a good starting point.

Reframe assumptions as “hypotheses”

This step could seem unnecessary, but it is really needed to change the way we see the ideas in our minds (and how we share them with others).

Assumptions are something we take for granted: it is what it is. Hypotheses, on the other hand, are tentative assumptions. We are implicitly saying that we have no proof that the assumption is true.

- We believe that, if the users are able to customize their menu, they will be able to find the options easily, improving performance and satisfaction.

- We believe that, if the users are able to search menu options, they will be able to find the options easily, improving performance and satisfaction.

A good exercise here is also to create ‘null hypotheses’, like:

- We believe that, if the users are able to customize their menu, they will find the options no more easily than before, with no improvement in performance or satisfaction.

- We believe that, if the users are able to search menu options, they will find the options no more easily than before, with no improvement in performance or satisfaction.

These are the ones we want to prove false.

Rank them in order of importance

A good piece of advice for prioritizing hypotheses is to evaluate what could happen if each hypothesis is proven false. In other words, what is the Cost of Delay (a concept some of you already know from the SAFe methodology)?

What would be the impact for our app or, at an even higher level, for our company?
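As a rough illustration, the ranking could be sketched like this. The `Hypothesis` class, the 1 to 5 scales, the `uncertainty` field and the scores are all assumptions made up for this example; they are team estimates, not measured data, and multiplying impact by uncertainty is just one simple way to get a risk score.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    name: str
    impact_if_false: int  # hypothetical team estimate, 1 (low) to 5 (high)
    uncertainty: int      # hypothetical team estimate, 1 (almost sure) to 5 (no evidence)

    @property
    def risk(self) -> int:
        # Simple illustrative score: the more it hurts and the less we know, the riskier
        return self.impact_if_false * self.uncertainty


def rank_by_risk(hypotheses):
    """Return the hypotheses ordered from most to least risky."""
    return sorted(hypotheses, key=lambda h: h.risk, reverse=True)


# Illustrative scores only, not real Frame Store data
backlog = [
    Hypothesis("Users can search menu options", impact_if_false=3, uncertainty=2),
    Hypothesis("Users can customize their menu", impact_if_false=4, uncertainty=4),
]

for h in rank_by_risk(backlog):
    print(h.risk, h.name)
```

The exact formula matters less than having the conversation: the team has to agree on what is at stake if each hypothesis turns out to be false.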

Design appropriate tests

As a piece of advice, I would say ‘test first what carries the most risk’. This can be applied to anything: do not start with the easy part just to realize later that the more complex one is impossible to achieve. If something has to be discarded, let’s discard it ASAP.

If we don’t know how to make a plane fly… why should we start by building the seats for the passengers? It doesn’t make sense.

There are multiple ways to test a hypothesis (qualitative and quantitative). We will go through a few of them:

AB test

Implement a minimum viable product (MVP) for the hypothesis we need to validate. We don’t need to have the full functionality implemented, just the most important features.

If we want to validate our hypothesis ‘We believe that, if the users are able to search menu options, they will be able to find them easily, improving performance and satisfaction.’, let’s implement a search field and that’s all: nothing else, nothing fancy.

Let’s release it to half of our users and see how it goes. Do not spend a lot of time on something that maybe nobody wants. Once we have proof that our users like it and that it gives them value, we will work to improve it!
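If you are curious about what ‘releasing it to half of our users’ can look like under the hood, here is a minimal sketch. It assumes each user has a stable id; the function name `assign_variant`, the experiment name and the variant labels are hypothetical. An AB testing tool would normally do this for you.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "menu-search") -> str:
    """Deterministically place a user in 'control' or 'search' (roughly 50/50)."""
    # Hash experiment name + user id so the same user always gets the same variant
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 2
    return "search" if bucket == 1 else "control"


# The same user always lands in the same group
print(assign_variant("user-42"))
```

Hashing instead of picking at random on every visit means a user never sees the search field appear and disappear between sessions, which would pollute the results.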

Fake door

This requires even less implementation than AB testing the feature BUT could generate more frustration for our users. To validate our hypothesis we will…

- Add the search field to our landing page

- Let the users type what they want to search for

- Do not perform any search; the portal could show a message like ‘Sorry, this feature is not available yet, we are working on it!’.

- Send to our analytics system how many people are actually using the feature.

As I said before, I believe this kind of communication needs to be handled appropriately to reduce users’ frustration (some companies use messages like ‘sorry, this feature is not available in your country yet’… a kind of white lie, but it could be less harmful).

This validation process could be run inside an AB test, just replacing the MVP implementation with this Fake Door.
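The fake door steps above can be sketched in a few lines. This is only an illustration: the `FakeDoorSearch` class is hypothetical, and the in-memory counter stands in for whatever analytics system you really use.

```python
from collections import Counter
from dataclasses import dataclass, field

NOT_AVAILABLE_MESSAGE = "Sorry, this feature is not available yet, we are working on it!"


@dataclass
class FakeDoorSearch:
    """A search field that records interest but never runs a search."""
    events: Counter = field(default_factory=Counter)
    users_seen: set = field(default_factory=set)

    def submit(self, user_id: str, query: str) -> str:
        # Stand-in for a call to the analytics system (assumption: in-memory only)
        self.events["search_attempt"] += 1
        self.users_seen.add(user_id)
        # No search is performed; the user only gets the message
        return NOT_AVAILABLE_MESSAGE

    def unique_users(self) -> int:
        return len(self.users_seen)


door = FakeDoorSearch()
door.submit("user-1", "wooden frame")
door.submit("user-1", "black frame")
door.submit("user-2", "oval frame")
print(door.events["search_attempt"], door.unique_users())
```

Counting unique users as well as attempts helps to tell real interest apart from one person retrying several times.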

Surveys

Let’s ask our users and see what the outcome is. What is important here is not to ask leading questions that steer the users towards the answer we expect.

Over all these years, I have heard ‘remember the yellow Walkman!’ something like a hundred times… how could I forget it!! That example teaches us what we should not ask, but the real value is in ‘what should we ask?’. It took me a few days to come up with just a few ‘non-interfering’ questions!

As advice, try to formulate questions along these lines:

- Do you believe that …

- How do you feel when…

Try to avoid yes/no answers. I would always prefer to go with a scale from ‘less likely’ to ‘very likely’ or similar.

Take into consideration that our product needs to be designed and implemented with hypothesis validation in mind from day 1. We cannot leave the integration of an AB test framework until the very end of our implementation; it needs to be given high priority!

Conduct the tests

Let’s do it! (but one at a time!)

You can use tools like Firebase to run your AB test. Define there the segment of users you want to include in the test and wait until the data you receive is significant enough (some tools, like Firebase, tell you this themselves).

How long will it take? It depends on the traffic you have on that part of the portal/app. Changes on the landing page will give you significant data sooner than changes on a more specific page, like the user profile or any other page with less traffic.
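To give an idea of what ‘significant enough’ means, here is a minimal sketch of a classic two-proportion z-test using only the standard library. This is not how Firebase or any specific tool computes its results; the function name and the numbers in the usage example are hypothetical.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Compare two conversion rates; return (z, two-sided p-value)."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    # Pooled rate under the null hypothesis that both groups behave the same
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        # Nobody (or everybody) converted in both groups: no difference to detect
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative numbers only: users who found their option, control vs. search field
z, p = two_proportion_z_test(success_a=100, total_a=1000, success_b=200, total_b=1000)
print(f"z = {z:.2f}, significant at 5%: {p < 0.05}")
```

The sample size sits inside the square root, which is why low-traffic pages need more time: with fewer users the same difference in rates gives a smaller z and a larger p-value.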

As for surveys, pick your candidates carefully so that they are a realistic representation of your users. Imagine that more than 75% of our users in the Frame Store Portal are people older than 50. Our candidates need to reflect this distribution, so do not pick a lot of 20-30 year old candidates!
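A simple way to respect that distribution is to pick candidates per group, with a quota for each one. The sketch below assumes a 75/25 split and two made-up groups; the function `pick_candidates`, the group names and the user ids are hypothetical.

```python
import random


def pick_candidates(users_by_group, target_share, sample_size, seed=0):
    """Pick survey candidates so that each group matches its share of the user base."""
    rng = random.Random(seed)  # fixed seed so the selection is reproducible
    picked = []
    for group, share in target_share.items():
        quota = round(sample_size * share)
        pool = users_by_group[group]
        # Never ask for more candidates than the group actually has
        picked.extend(rng.sample(pool, min(quota, len(pool))))
    return picked


# Hypothetical user base, split by age group
users_by_group = {
    "over_50": [f"senior-{i}" for i in range(100)],
    "50_or_under": [f"younger-{i}" for i in range(100)],
}
# Assumed shares, based on the 75% figure used in the example above
target_share = {"over_50": 0.75, "50_or_under": 0.25}

candidates = pick_candidates(users_by_group, target_share, sample_size=20)
print(len(candidates))
```

With 20 candidates, this gives 15 people over 50 and 5 from the rest of the user base.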

Synthesize your learnings

We need to be open-minded at this stage of the hypothesis validation. Maybe the hypothesis is proved false. This should never be considered a mistake! This is a success! We were able to save time and money for the company by no longer pursuing something that has no value.

A lot of companies have failed because they were unable to validate hypotheses, but not just that. I have seen cases where, after designing and conducting the appropriate test, they failed at reading the results. How is that possible?? It is because people are so committed to the assumption that they do not accept that it could be proved false. People sold the idea to upper management before proving it right and now… ‘Wow… I cannot go back and tell them that what I thought was not true, that I was wrong!’. This is a big mistake, the biggest one we can make at this point. We spent time and money validating a hypothesis and now we will spend more time implementing it even though it is not useful to anybody! Crazy stuff…

Be open-minded and change your thinking to ‘Wow… luckily we proved that our assumption was false before moving forward with it. Now we know more about our users’ needs! We can formulate new assumptions!’. Nobody failed!

Act

Implementing with the confidence that you are giving value to your users is the best feeling ever! You listen to them and work with the team (UX, developers, QAs,…) to make things real!

There is still a small chance of failure (it is almost impossible to reduce the risk to 0!) but it is nothing compared with the chances of failure when you put your team to work implementing an assumption that you didn’t validate!

Conclusions

We hope this article gives you some clues to improve your hypothesis validation process. Our advice… validate, validate and… iterate!

We wish you all the best on this long but very fulfilling process!