It’s all about processes

“Making software is like making cars”

This is a recurring phrase that I sometimes debate with acquaintances. We are all engineers, so in theory the two should be the same, but IT people mostly answer no: software evolves, whereas a car is something static.

But let’s think again about how a car is created. When a brand designs a new model, it goes through several phases, from the initial concepts to the final exploded drawing, in which each part is refined and designed. If we look at a picture of a half-built car, we can see circular holes throughout the structure. What are they for? In some cases they reduce the overall weight of the vehicle or make it easier to route cables, but in others they give the welding robots access, or let operators screw parts together or reach certain areas during assembly.

In essence, when a car brand designs a car, it does so not only in terms of safety, regulations, power or aesthetics, but also in terms of how it will be manufactured and assembled, that is, in terms of the manufacturing process. Let us now return to the sentence at the beginning. If we think of a car model as equivalent to our software, and of the cars manufactured as its releases, then this analogy raises a question:

Do we manufacture/design software based not only on the end product, but also on the process of manufacturing that software?

Answer: Not always, although we should.

If we transfer the analogy to the software world, we see that software companies may have multiple teams working on the same piece of software, and that the software is developed in phases or pieces that are then integrated with each other. We know that a perfectly developed module or library with 100% coverage can still fail when working with other equally “perfect” software, because of integration errors caused by miscommunication or misinterpretation of the documentation. This is a fairly common case in the software world: many errors are process-related. And those bugs affect the customer’s perception of quality, since the customer only sees the bug and not the effort that went into testing. We also know that a change in the development and release process can have a very big impact on the quality of the software, even without any change to the testing. For example, working with a version control system such as Git clearly improves product quality compared to sharing our code via Slack.

A real-world case

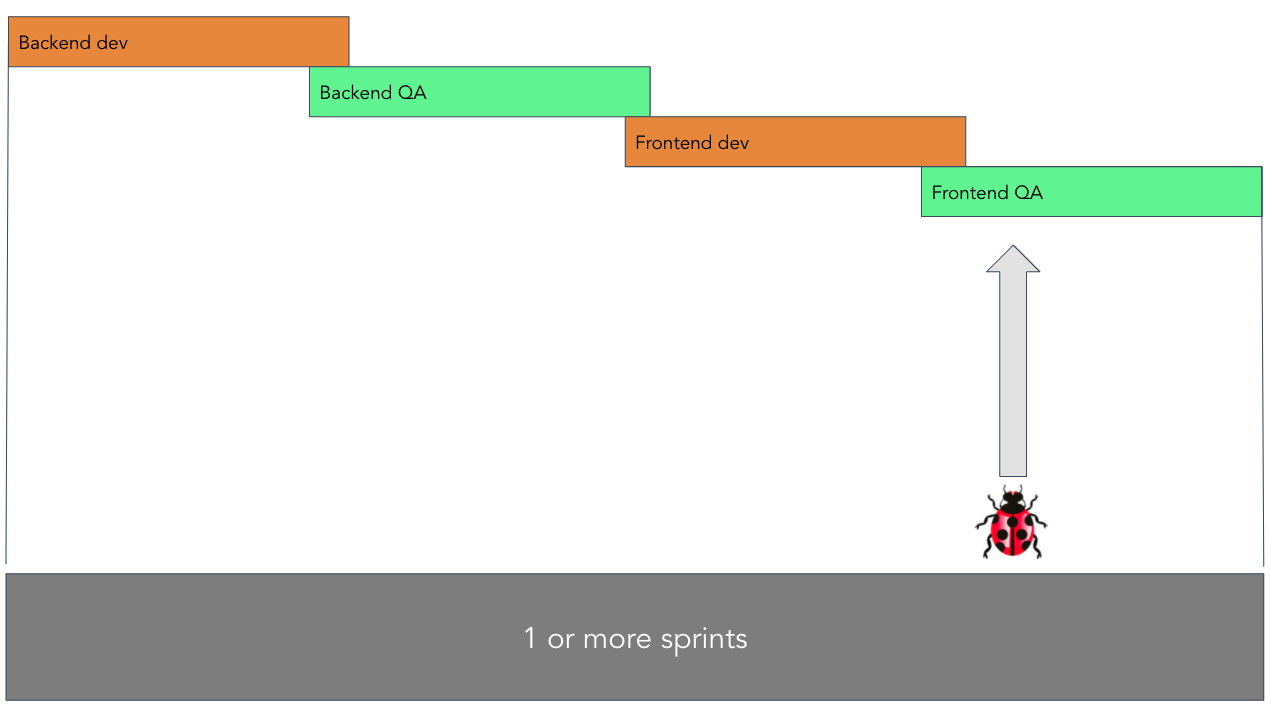

Let’s think about an example we can find in many companies. Imagine a heterogeneous Agile team with backend and frontend developers, one or two QAs, a Scrum Master or PO and maybe some DevOps engineers. Nothing out of the ordinary. In the retros they complain about a lack of focus during sprints: bugs keep forcing them to stop and switch context, sometimes to fix work from a previous sprint, and many of those bugs come from communication problems within the team.

This, as shown in the picture, is quite common. The backend developer does a task, and when it is finished the QA tests it and automates tests for it. The task is set to ‘done’, and then the frontend comes into play, developing against the API the backend has created. Then the frontend QA tests the frontend task and automates tests for it. This process can take more than one sprint, which means the backend developers have already moved on to other tasks.

So what happens if the QA finds a bug in the frontend that actually uncovers a problem further upstream?

The error can have different origins: the context given by the PO may have been incomplete, there may be a bug that was not caught in the backend, or FE and BE may have understood the requirement differently. As always in these cases, people talk about a lack of documentation, and the whole team has to revisit tasks that, in theory, were already closed.

The proposed process change is quite simple: document the APIs with OpenAPI and provide (and keep up to date) mockups of these APIs before starting the backend tasks. Documenting an endpoint is easy, and there is no need to document endpoints nobody is going to work on yet: it is not all or nothing. Creating mockups from the documentation is also easy; there are a number of ready-made examples on Docker Hub.
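
To make “creating mockups from the documentation is easy” concrete, here is a minimal sketch in Python using only the standard library. It assumes the OpenAPI document has already been parsed into a dict (normally it would be loaded from a YAML or JSON file), answers only GET requests, and returns the JSON `example` of the lowest documented status code. The spec contents and the helper names `mock_response`, `make_handler` and `serve` are hypothetical.

```python
import json
import re
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical OpenAPI 3 fragment documenting one endpoint. In a real project
# this would be loaded from the team's openapi.yaml / openapi.json file.
SPEC = {
    "openapi": "3.0.3",
    "info": {"title": "Users API", "version": "0.1.0"},
    "paths": {
        "/users/{userId}": {
            "get": {
                "responses": {
                    "200": {
                        "description": "A single user",
                        "content": {
                            "application/json": {"example": {"id": 42, "name": "Ada"}}
                        },
                    },
                    "404": {"description": "User not found"},
                }
            }
        }
    },
}


def _template_regex(template):
    """Turn an OpenAPI path template like /users/{userId} into an anchored regex."""
    literal_parts = re.split(r"\{[^/}]+\}", template)
    return re.compile("^" + "[^/]+".join(re.escape(p) for p in literal_parts) + "$")


def mock_response(spec, method, path):
    """Return (status, example_body) for a request, answering from the spec's examples."""
    for template, operations in spec["paths"].items():
        if not _template_regex(template).match(path):
            continue
        operation = operations.get(method.lower())
        if operation is None:
            return 405, None  # the path is documented, the method is not
        # Answer with the lowest numeric status code documented (e.g. 200 before 404).
        codes = [code for code in operation["responses"] if code.isdigit()]
        if not codes:
            return 501, None
        status = min(codes, key=int)
        media = operation["responses"][status].get("content", {}).get("application/json", {})
        return int(status), media.get("example")
    return 404, None  # the path is not documented at all


def make_handler(spec):
    """Build an http.server handler class that serves GET requests from the spec."""

    class MockHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            status, body = mock_response(spec, "GET", self.path.split("?", 1)[0])
            payload = json.dumps(body).encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(payload)))
            self.end_headers()
            self.wfile.write(payload)

    return MockHandler


def serve(port=8080):
    """Serve the mock on localhost until interrupted (blocks the caller)."""
    HTTPServer(("127.0.0.1", port), make_handler(SPEC)).serve_forever()
```

Calling `serve()` and requesting `/users/7` on port 8080 should return the documented example. The ready-made mock servers published on Docker Hub cover much more of the OpenAPI specification (request validation, multiple examples, other methods); this sketch only shows the principle that the documentation itself is enough to stand up a working endpoint.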

What do we gain from this?

- BEs have functional documentation with examples to test against, which also serves as a single source of truth.

- BE QAs have mockups to program tests against before any code exists, and the examples in the documentation can be used to generate automated tests. BDD also makes sense here, since tests can be formulated before the BEs have finished the task, and these tests can serve as acceptance tests.

- FEs have common documentation to consult and test against, and since they also have mockups, they can start their tasks right away, calling working URLs even if those URLs are mocked.

- FE QAs can write their tests much earlier.

- Even DevOps can test pipelines with the mockups, for example by spinning up ephemeral environments for testing.
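
Using the documented examples to generate automated tests could look like the following minimal Python sketch. It assumes path items contain only HTTP operations (no shared `parameters` key), fills every path parameter with the hypothetical value `"1"`, and checks that a response has the same status and the same JSON shape (keys and value types) as the documented example rather than identical values. The spec, `check_contract` and the `call` function it receives are hypothetical.

```python
import re

# Hypothetical OpenAPI fragment (the same kind of document the mockups are built from).
SPEC = {
    "paths": {
        "/users/{userId}": {
            "get": {
                "responses": {
                    "200": {"content": {"application/json": {
                        "example": {"id": 42, "name": "Ada", "roles": ["admin"]}}}},
                    "404": {"description": "User not found"},
                }
            }
        }
    }
}


def example_cases(spec):
    """Yield (method, path, status, example) for every documented 2xx JSON example."""
    for template, operations in spec["paths"].items():
        # Assumption: any value is acceptable for path parameters, so use "1".
        path = re.sub(r"\{[^/}]+\}", "1", template)
        for method, operation in operations.items():
            for status, response in operation.get("responses", {}).items():
                media = response.get("content", {}).get("application/json", {})
                if status.startswith("2") and "example" in media:
                    yield method.upper(), path, int(status), media["example"]


def same_shape(expected, actual):
    """True if `actual` has the same keys and JSON types as `expected`, recursively."""
    if isinstance(expected, dict):
        return (isinstance(actual, dict)
                and expected.keys() == actual.keys()
                and all(same_shape(expected[k], actual[k]) for k in expected))
    if isinstance(expected, list):
        if not isinstance(actual, list):
            return False
        # Every returned item must look like the first documented item.
        return all(same_shape(expected[0], item) for item in actual) if expected else True
    return type(expected) is type(actual)


def check_contract(spec, call):
    """Run `call(method, path) -> (status, body)` for every example; return failures."""
    failures = []
    for method, path, status, example in example_cases(spec):
        actual_status, body = call(method, path)
        if actual_status != status:
            failures.append(f"{method} {path}: expected {status}, got {actual_status}")
        elif not same_shape(example, body):
            failures.append(f"{method} {path}: body does not match the documented example")
    return failures
```

In practice `call` would wrap an HTTP client. Pointing it at the mockup first and at the real backend later is what allows these checks to be written before the code exists and then reused as acceptance tests.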

But the main benefit is that the whole team can focus on one task at a time instead of shifting its focus back and forth over several sprints.

A process change can improve product quality by helping the team focus on what is important and by reducing uncertainty through a single source of truth. And that could be the first conclusion of this article: understanding, improving and monitoring processes has a critical impact on product quality. But it also leaves us with a question: what, then, is the role of QA engineers?

Analysing processes

We have known for a long time that testing is not synonymous with quality. You can test a system 100 times and find 100 different errors, simply because there are too many shortcomings in the development process (bad code, too many dependencies, incorrect configuration management, a poor development environment, …). And after testing it 100 times, the system’s quality will not have improved. Testing does not increase quality! Think of tests as a safety net that catches (some) bugs.

There are many types of bugs, which can be classified into typologies and found and fixed with well-known techniques, but they can be summarised as code bugs, configuration bugs and data bugs.

There is also another typology of errors, caused by poor communication between teams or by poorly defined processes. These are the most dangerous because they are unpredictable, and they are the ones that can reach production. If, for example, someone changes a configuration value in a test environment and does not tell anyone, that configuration may end up missing in production. It is very difficult to model a scenario and build a test that foresees this situation; only standardising processes can prevent it. And to improve these processes, the ideal is to analyse them cyclically.
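
The undocumented configuration change described above is a case where standardising the process is what makes automation possible: once each environment’s configuration is kept as versioned data, a simple key comparison can run in the pipeline. The sketch below is a minimal Python illustration that assumes flat key/value configs; the environment names, keys and the helpers `config_drift` and `assert_no_drift` are hypothetical.

```python
def config_drift(reference, other):
    """Report keys present in one environment's config but not in the other.

    Values are expected to differ between environments (URLs, credentials),
    so only the sets of keys are compared.
    """
    return {
        "missing": sorted(reference.keys() - other.keys()),
        "unexpected": sorted(other.keys() - reference.keys()),
    }


def assert_no_drift(environments, reference_name):
    """Raise AssertionError if any environment's keys diverge from the reference."""
    reference = environments[reference_name]
    problems = []
    for name, config in environments.items():
        if name == reference_name:
            continue
        drift = config_drift(reference, config)
        if drift["missing"] or drift["unexpected"]:
            problems.append(f"{name}: {drift}")
    if problems:
        raise AssertionError("configuration drift:\n" + "\n".join(problems))


# Hypothetical configs, e.g. loaded from one versioned file per environment.
# Someone added PAYMENT_TIMEOUT to staging and never told anyone.
ENVIRONMENTS = {
    "staging": {"DB_URL": "postgres://staging-db", "CACHE_TTL": "60", "PAYMENT_TIMEOUT": "30"},
    "production": {"DB_URL": "postgres://prod-db", "CACHE_TTL": "60"},
}
```

Running `assert_no_drift(ENVIRONMENTS, "staging")` in CI would fail and name `PAYMENT_TIMEOUT` as missing in production. The check does not replace the conversation between teams; it only makes a forgotten change visible before the release.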

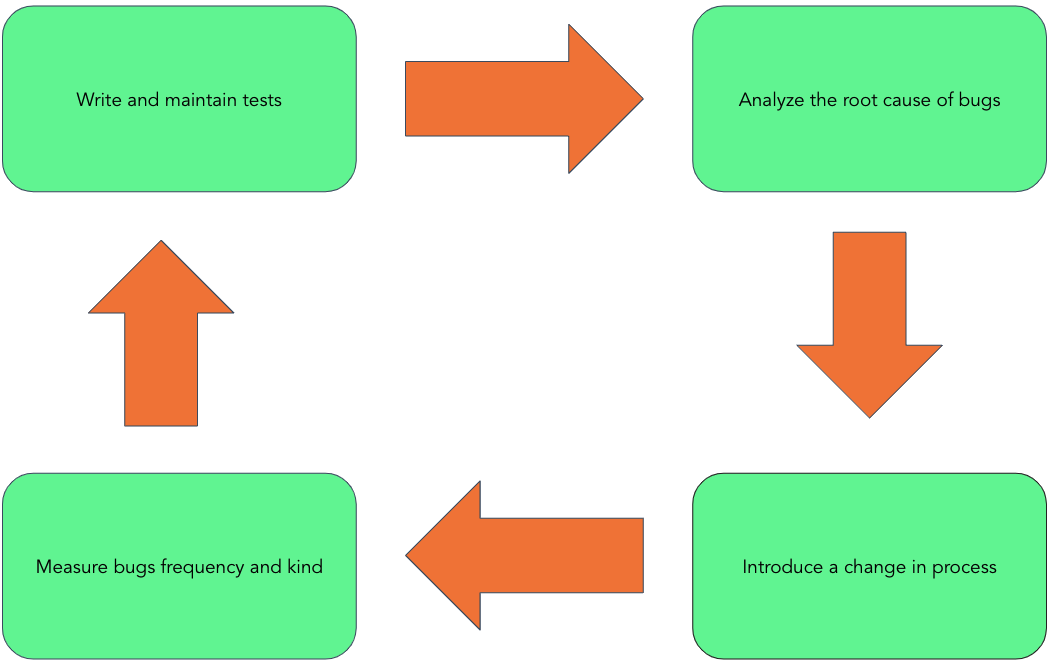

The main idea is to use our tests to detect bugs and, together with the information we have about other bugs, classify them by typology and use that classification to improve our processes. Once we introduce new processes, we analyse their impact by measuring the typology and frequency of new bugs in the same way.
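
A minimal Python sketch of that measurement loop, assuming each bug has already been labelled with one of the typologies mentioned in this article (code, configuration, data, and a “process” label for communication and process bugs). Rates are normalised per week so that observation windows of different lengths stay comparable; the labels, the helper names and the numbers are hypothetical.

```python
from collections import Counter

# The typologies named in the text; "process" covers communication and
# poorly defined process bugs. The labels themselves are an assumption.
TYPOLOGIES = ("code", "configuration", "data", "process")


def typology_rates(bug_labels, weeks):
    """Bugs per week for each typology over an observation window of `weeks` weeks."""
    counts = Counter(bug_labels)
    unknown = set(counts) - set(TYPOLOGIES)
    if unknown:
        raise ValueError(f"unclassified bug typologies: {sorted(unknown)}")
    return {t: counts[t] / weeks for t in TYPOLOGIES}


def rate_change(before, after):
    """Per-typology change in bugs/week; negative means fewer bugs after the change."""
    return {t: after[t] - before[t] for t in TYPOLOGIES}


# Hypothetical data: four weeks before and four weeks after introducing
# OpenAPI documentation and mockups.
before = typology_rates(["process"] * 6 + ["code"] * 4, weeks=4)
after = typology_rates(["process"] * 2 + ["code"] * 4, weeks=4)
print(rate_change(before, after))
# {'code': 0.0, 'configuration': 0.0, 'data': 0.0, 'process': -1.0}
```

With counts this small the numbers are noisy, so a single window proves little; changing one process at a time is what makes a difference like this attributable to that change.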

For this to work, the analysis of bugs must be objective and in-depth. Do not just look at “who introduced the bug”; try to understand the chain of events that led to it.

Some points to keep in mind:

- Analyse your processes using test results and bug typologies.

- Analyse production bugs before those detected by your tests (survivorship bias).

- Change your processes one at a time if you plan to measure them.

- Check whether a process suits your team; there are no silver bullets.

- Understand your context and use mockups to control it.

- Don’t test what you can’t change (ownership).

- Prioritise processes that are standard.

- Prioritise processes that can be automated.

- Prioritise processes that decouple your team from others.

Points to avoid:

- Don’t ignore the needs of your team when adopting processes.

- Don’t try to test impossible scenarios.

- If something cannot be tested, don’t put it in the “tested” column.

- As a QA, you are not obliged to mark something as tested. Share the responsibility with your team.

Conclusion

Sooner or later, all companies that produce software will adopt DevOps practices in order to deliver more frequently. Test systems, especially the core ones, will have to evolve and adapt along with the industry, and QA engineers will have to take on new roles, more focused on providing quality culture, tools and processes so that the teams they work with can deliver more frequently and with higher quality.

In that future, however, I struggle to see a place for a QA who takes days to write a long end-to-end test for software that is constantly changing, and whose features reach production as soon as they are pushed to the production branch. And it is even harder to see how the speed of writing those tests can be reconciled with the completeness needed to provide assurance.

The software world is changing. Let’s try to make this new world a better place.

Author

Software Engineer with more than 16 years of experience in software quality assurance. A passionate and strong advocate of automation and code, I have been creating QA automation frameworks, teams and departments since 2010.
