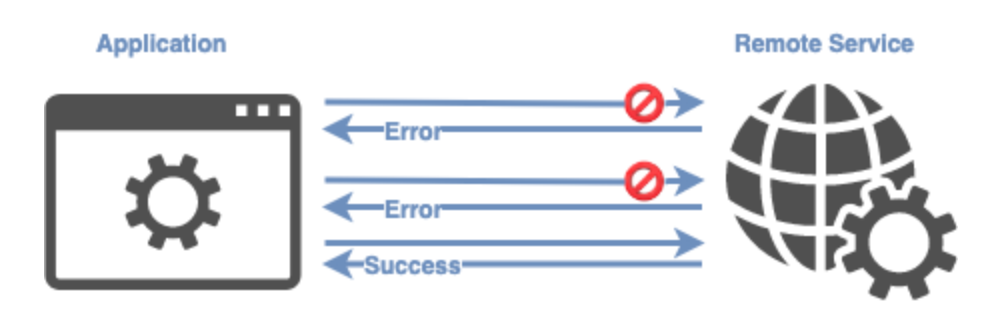

Today I would like to talk about the Retry pattern. In distributed applications, where services constantly communicate with each other and with external resources, transient failures can occur. The most common causes are momentary losses of network connectivity, temporarily unavailable services, and timeouts.

These errors normally resolve themselves within a short time, so that if the service or resource is invoked again, it responds correctly. A classic example of a transient error is a failure to connect to the database because a peak of simultaneous connections exceeds the configured maximum.

However, even though these failures are rare, the application must handle them correctly to minimize their impact. One possible solution to this problem is the Retry pattern.

Retry Pattern

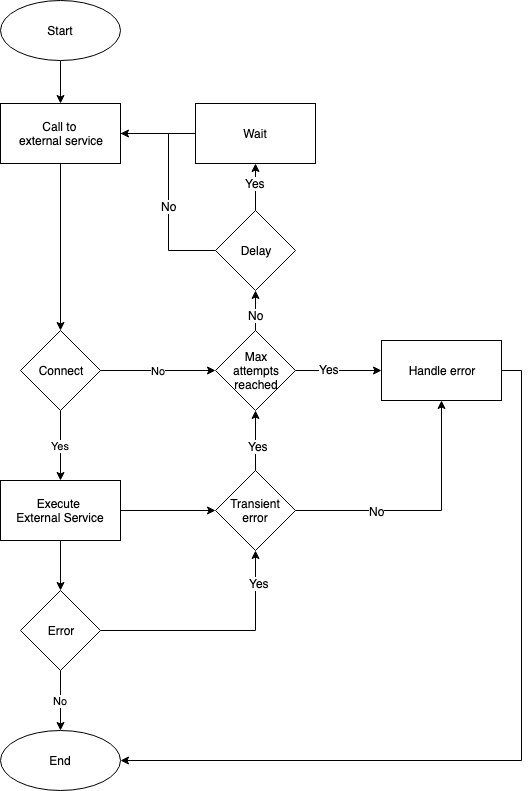

The Retry pattern is known as a stability pattern and, as its name indicates, it consists of retrying an operation that has failed. That definition is simplistic, though: depending on the type of error detected and/or the number of attempts, different actions can be taken:

- Retry: If the error indicates that it is a temporary failure or an atypical failure, the application can retry the same operation immediately since the same error will probably not occur again.

- Retry after a waiting time: If the error has occurred due to a network connection problem or a peak of requests to the service, it may be prudent to allow some time before attempting to perform the operation again.

- Cancel: If the error indicates that we are not facing a temporary failure, the operation should be canceled and the error reported or managed properly.

These actions can be combined to create a retry policy adjusted to the needs of our application.
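As a rough illustration of how the three actions might be combined, here is a minimal sketch. The `RetryDecision` type, the `decide` function and the mapping from exception types to actions are assumptions made for this example, not part of any library; a real policy should reflect the failure modes of the service being called.

```kotlin
import java.io.IOException
import java.net.SocketTimeoutException
import java.time.Duration

// The three actions described above, modelled as a small closed hierarchy.
sealed class RetryDecision {
    object RetryNow : RetryDecision()
    data class RetryAfter(val delay: Duration) : RetryDecision()
    object Cancel : RetryDecision()
}

// Hypothetical classification: which exception maps to which action is an
// assumption for illustration only.
fun decide(error: Throwable, attempt: Int, maxAttempts: Int): RetryDecision = when {
    attempt >= maxAttempts -> RetryDecision.Cancel
    // Checked first because SocketTimeoutException is itself an IOException.
    error is SocketTimeoutException -> RetryDecision.RetryAfter(Duration.ofMillis(200L * attempt))
    error is IOException -> RetryDecision.RetryNow
    else -> RetryDecision.Cancel
}

fun <T> runWithPolicy(maxAttempts: Int, action: () -> T): T {
    var attempt = 1
    while (true) {
        try {
            return action()
        } catch (e: Exception) {
            when (val decision = decide(e, attempt, maxAttempts)) {
                is RetryDecision.RetryNow -> Unit
                is RetryDecision.RetryAfter -> Thread.sleep(decision.delay.toMillis())
                is RetryDecision.Cancel -> throw e
            }
            attempt++
        }
    }
}
```

Keeping the decision in a separate function means the policy can be changed or tested without touching the loop that executes it.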

This would be an example of a simple implementation in Kotlin where only the number of failed attempts is taken into account and there is a waiting time between each retry:

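    import java.time.Duration
    import java.time.LocalDateTime

    // The enclosing class, its name, its constructor parameters and
    // isTimerExpired are assumptions: the snippet shows only the two methods.
    class RetryCommand<T>(private val maxRetries: Int, private val waitTime: Duration) {

        private var retryCounter = 0
        private var lastFailure: LocalDateTime = LocalDateTime.now()

        // True once waitTime has elapsed since the last failure.
        private val isTimerExpired: Boolean
            get() = !LocalDateTime.now().isBefore(lastFailure.plus(waitTime))

        fun run(action: () -> T): T {
            return try {
                action.invoke()
            } catch (e: Exception) {
                lastFailure = LocalDateTime.now()
                retry(action)
            }
        }

        @Throws(RuntimeException::class)
        private fun retry(action: () -> T): T {
            // The failed call in run() counts as attempt 1, so at most
            // maxRetries - 1 further attempts are made here.
            retryCounter = 1
            while (retryCounter < maxRetries) {
                if (isTimerExpired) {
                    try {
                        return action.invoke()
                    } catch (ex: Exception) {
                        retryCounter++
                        // Restart the timer so the next retry also waits.
                        lastFailure = LocalDateTime.now()
                    }
                } else {
                    // Sleep briefly instead of spinning while the timer runs.
                    Thread.sleep(10)
                }
            }
            throw RuntimeException("Command fails on all retries")
        }
    }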

Of course, implementations can be much more sophisticated. For example, an implementation can start with a policy of consecutive retries, since the service normally recovers quickly. If the error persists after a number of consecutive retries, we can introduce a waiting period between retries and finally, if the service still does not recover, cancel the operation.

The complexity of the implementation must respond to the real needs of our application and business requirements.
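The staged approach described above (immediate retries first, then retries with a pause, then cancellation) could be sketched roughly as follows. The function name `retryInStages` and its parameters are hypothetical, and a fixed pause is assumed for the second stage.

```kotlin
// Hypothetical staged policy: `immediateRetries` retries with no pause, then
// `delayedRetries` retries separated by `delayMillis`, then the operation is cancelled.
fun <T> retryInStages(
    immediateRetries: Int,
    delayedRetries: Int,
    delayMillis: Long,
    action: () -> T
): T {
    var lastError: Exception? = null
    val totalAttempts = 1 + immediateRetries + delayedRetries
    for (attempt in 1..totalAttempts) {
        // Attempts that come after the immediate stage wait before calling again.
        if (attempt > 1 + immediateRetries) Thread.sleep(delayMillis)
        try {
            return action()
        } catch (e: Exception) {
            lastError = e
        }
    }
    // Cancel: report the failure, keeping the last error as the cause.
    throw IllegalStateException("Operation cancelled after $totalAttempts attempts", lastError)
}
```

For instance, `retryInStages(2, 3, 500) { callService() }` would make up to six calls in total, where `callService` stands for whatever operation is being protected.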

There are libraries that implement the Retry pattern in a simple way, such as Spring Retry.

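    import org.slf4j.LoggerFactory
    import org.springframework.context.annotation.Bean
    import org.springframework.context.annotation.Configuration
    import org.springframework.remoting.RemoteAccessException
    import org.springframework.retry.annotation.EnableRetry
    import org.springframework.retry.annotation.Recover
    import org.springframework.retry.annotation.Retryable

    @Configuration
    @EnableRetry
    class Application {
        // The bean is registered here, so the class needs no @Service of its own.
        @Bean
        fun service(): RetryService {
            return RetryService()
        }
    }

    // Named RetryService to match the logger name in the console output.
    // Class and methods are open so that Spring can create the retry proxy.
    open class RetryService {
        private val logger = LoggerFactory.getLogger(RetryService::class.java)

        // maxAttempts includes the first call: 2 means one call plus one retry.
        @Retryable(maxAttempts = 2, include = [RemoteAccessException::class])
        open fun service() {
            logger.info("Calling external service...")
            // ... do something
            logger.info("Success calling external service")
        }

        @Recover
        open fun recover(e: RemoteAccessException) {
            // ... do something when call to service fails
            logger.error("Recover for external service")
        }
    }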

As we can see, the implementation with this library is very simple. The first thing to do is to configure the application with the @EnableRetry annotation.

Next, we add the @Retryable annotation to the method that is going to be retried in case of error. The @Recover annotation marks the method where execution continues once the maximum number of attempts (maxAttempts = 2, a figure that includes the first call) has been used up and the error is of the RemoteAccessException type.

You can find the complete example on GitHub (link is at the end of the article).

We can follow the flow of the pattern in the console output. The calls succeed until a transient error occurs. The operation is then attempted twice in total, as configured with maxAttempts = 2, and since the error persists, execution ends in the recovery method.

    INFO 81668 --- [Logger : RetryService ] - Calling external service...
    INFO 81668 --- [Logger : RetryService ] - Success calling external service
    INFO 81668 --- [Logger : RetryService ] - Calling external service...
    INFO 81668 --- [Logger : RetryService ] - Success calling external service
    INFO 81668 --- [Logger : RetryService ] - Calling external service...
    INFO 81668 --- [Logger : RetryService ] - Calling external service...
    ERROR 81668 --- [Logger : RetryService ] - Recover output

This pattern works very well when errors are transient and sporadic and are resolved by a later call, but several considerations must be taken into account when applying it:

- The type of error: it must be an error that tells us that it can be recovered quickly.

- The criticality of the error: retrying the operation can hurt the performance of the application. In some situations, it is better to handle the error and let the user decide whether to retry the operation.

- The policy of retries: a policy of continuous retries of the operation, especially without waiting times, could worsen the status of the remote service.

- Side effects: if the operation is not idempotent, there is no guarantee that retrying it will lead to the expected result.
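On the point about retry policies, one common way to avoid hammering a struggling service is to let the waiting time grow with each retry and to randomize it. The following is only a sketch of that idea (exponential backoff with "full jitter"); the function name `backoffMillis` and its parameters are assumptions for this example, and it expects a reasonably small `baseMillis`.

```kotlin
import kotlin.random.Random

// Delay before retry number `retry` (1-based): baseMillis * 2^(retry - 1),
// capped at maxMillis, then a random value in 0..cap is chosen so that many
// clients do not all retry at the same instant.
fun backoffMillis(
    retry: Int,
    baseMillis: Long,
    maxMillis: Long,
    random: Random = Random.Default
): Long {
    require(retry >= 1) { "retry must be 1 or greater" }
    require(baseMillis >= 0 && maxMillis >= 0) { "delays must not be negative" }
    val exponent = minOf(retry - 1, 30)           // bound the shift for large retry counts
    val capped = minOf(baseMillis shl exponent, maxMillis)
    return random.nextLong(capped + 1)            // uniform in 0..capped
}
```

A caller would sleep for `backoffMillis(n, 100, 5_000)` milliseconds before the n-th retry, so the upper bound of the wait doubles each time until it reaches five seconds.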

It is not advisable to use this pattern in case of:

- Managing non-transient errors that are not related to connection or service failures (such as business logic errors).

- Long-lasting errors: the waiting time and the resources required are too high. For these cases there are other solutions, such as the Circuit Breaker pattern, which we will discuss in another article.

I would also like to stress that it is highly recommended to keep a record of failed operations. This information helps to size the infrastructure of a project correctly and to find recurring errors, including those silenced by the application's error handling.
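One possible way to keep such a record is to have the retry helper itself note every failed attempt, including those that a later retry recovers from. The sketch below is an assumption-laden illustration: `FailedAttempt`, `RecordingRetry` and their fields are hypothetical names, and an in-memory list stands in for whatever log or metrics system the project really uses.

```kotlin
import java.time.Instant

// Hypothetical record of one failed attempt; the fields are illustrative.
data class FailedAttempt(
    val operation: String,
    val attempt: Int,
    val error: String,
    val at: Instant
)

class RecordingRetry(private val maxAttempts: Int) {
    private val recorded = mutableListOf<FailedAttempt>()

    // Read-only view of everything that has failed so far.
    val failures: List<FailedAttempt> get() = recorded

    fun <T> run(operation: String, action: () -> T): T {
        var last: Exception? = null
        for (attempt in 1..maxAttempts) {
            try {
                return action()
            } catch (e: Exception) {
                // Record the failure even if a later attempt succeeds.
                recorded += FailedAttempt(operation, attempt, e.toString(), Instant.now())
                last = e
            }
        }
        throw IllegalStateException("$operation failed after $maxAttempts attempts", last)
    }
}
```

Because recovered failures are recorded too, errors that the retry would otherwise hide remain visible when the record is reviewed.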

You can find the complete examples discussed in this article in our GitHub.

Author

Software developer with over 24 years of experience working with Java, Scala, .Net, JavaScript, Microservices, DDD, TDD, CI/CD, AWS, Docker, Spark