
The idea that a heavily segregated logical design is the way to achieve “Almost-Infinite” scalability is stated in Pat Helland’s illuminating paper “Life beyond Distributed Transactions: an Apostate’s Opinion”, as well as in “Implementing Domain-Driven Design” by Vaughn Vernon.

In this article, I’ll try to give a few insights from my perspective, regarding the concept of “Almost-Infinite Scalability” and its strong relationship with logical design.

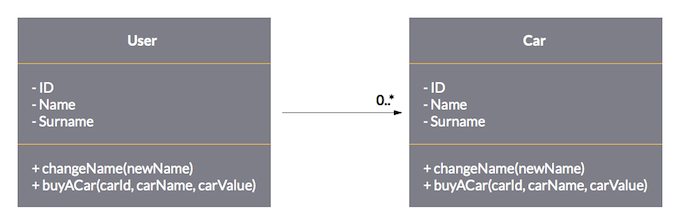

In my experience, one of the hardest parts of DDD to put into practice has always been the design of aggregate root boundaries. Typically, in the early years of DDD, we had big clusters of entities, all starting from a “central” one. Each of those entities was related to the others by means of direct, unidirectionally navigable associations.

The ORM took care of fetching related entities, usually by lazy-loading them. This mechanism was used even between aggregate roots themselves, although I’ll show that it wasn’t so beneficial to the model.

One justification was to easily support reads in the same model used for writing, which was another very strong “hidden” assumption. Everything was simply well supported by the tools we had in place. ORMs, proactive and imperative technologies, and relational (transactional) databases all seemed to support this kind of design so well that expressing concerns about “transactionality by design” was simply perceived as overkill.

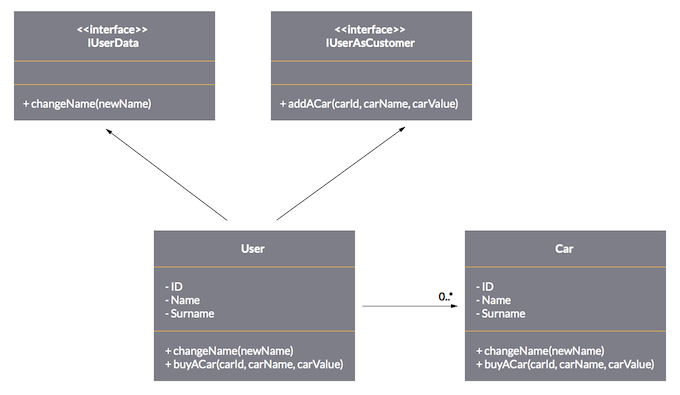

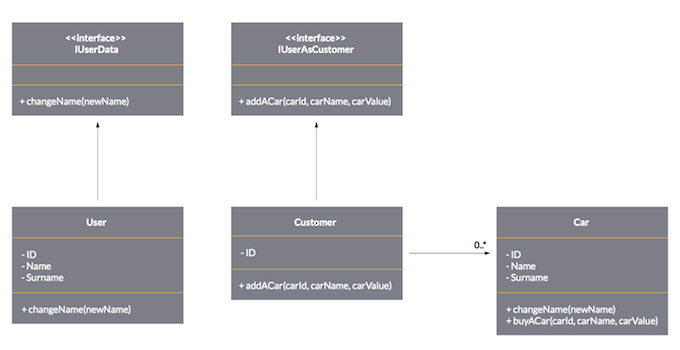

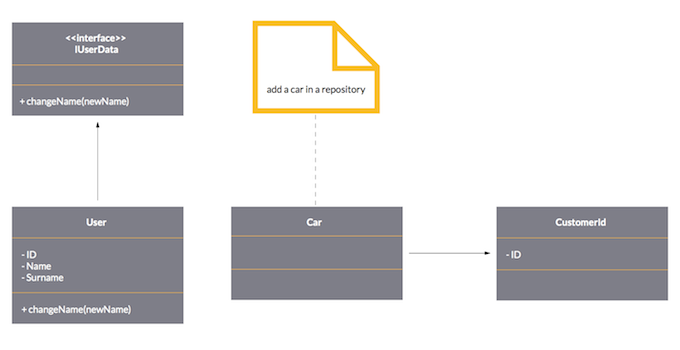

The guidelines discussed by Vernon in the form of 4 “rules of thumb” lead to a completely different design perspective. They are logically justified by OO fundamentals like the Single Responsibility Principle, Interface Segregation Principle and so on.

Let’s look at the “maths”:

As seen above, by consecutively applying a set of well-known OO principles, we inherently achieve an aggregate partitioning that complies with Vernon’s “rules”.
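To make the contrast with navigable associations concrete, here is a minimal sketch of two small aggregates, where one refers to the other by identity only. The `Order` and `CustomerId` names are hypothetical and chosen purely for illustration; they do not come from Vernon's book or from any real codebase.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass(frozen=True)
class CustomerId:
    """Value object: the only thing an order knows about its customer."""
    value: str


@dataclass
class Order:
    """Hypothetical aggregate root with a small, self-contained boundary."""
    order_id: str
    customer_id: CustomerId  # reference by identity, not a navigable Customer object
    lines: List[Tuple[str, int]] = field(default_factory=list)

    def add_line(self, sku: str, quantity: int) -> None:
        # The invariant lives inside the boundary and needs no other aggregate.
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        self.lines.append((sku, quantity))

    def total_quantity(self) -> int:
        return sum(quantity for _, quantity in self.lines)


order = Order("o-1", CustomerId("c-1"))
order.add_line("sku-1", 2)
order.add_line("sku-2", 3)
```

Because `Order` holds a `CustomerId` rather than a `Customer`, loading an order can never trigger a hidden fetch of the customer. Whoever needs the customer has to ask for it explicitly.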

ARCHITECTURAL BENEFITS OF A SEGREGATED MODEL

- Explicit transactional scope: by segregating the domain, each domain is likely to be fully responsible for the transactions that operate on it. That is, there is a correspondence between a method on the aggregate root and the operational transaction on that aggregate. Transactionality is a mechanism provided by the persistence component, so with relational technologies you can still benefit from SQL transactions. But in some non-relational databases, the only “transaction” mechanism is the atomic save of a single “document” (this was the case for MongoDB before it introduced multi-document transactions), and that document should correspond to an aggregate state.

- Design portability: having explicit transactional boundaries makes the design portable. In a hypothetical transition to a distributed system, it could become convenient to introduce eventual consistency between one strong transactional boundary and another. Think for example about possible migrations to message-driven technologies.

- Cohesiveness: from the point of view of cohesion, since the model is in its normal form, a change is more likely to affect only one domain, decoupled from the rest of the aggregates.

- Simplicity: maintaining a single, simple, cohesive and decoupled module is always simpler than maintaining the same logic inside a big network of classes. To me, this concept alone justifies the domain segregation approach.

- Fetching performance: since the model is unrelated to other domains, it’s feasible to use eager fetching strategies by default. This should save you from the typical pitfall of having the database hammered by “hidden” lazy-loads. In non-relational databases, where lazy-loading often makes no sense, you can still benefit from avoiding big documents (which are a huge I/O problem) and massive object mappings.

- Memory consumption: cohesiveness reduces the number of properties and embedded value objects to the minimum you need to accomplish the transaction itself.

- Collaborative domains: due to the reduced amount of data in every transactional boundary, conflicts from intensive collaboration on the same data become less likely.

- Reading performance: by the same logic, extracting some kind of separate model for reading fits naturally as an eager read derivation (whether it is a read model, some materialized views, or even CQRS). Even if you avoid eager read derivation techniques, there’s no “black magic” in deriving the read side, and therefore the danger of overshooting the database is reduced.

- Decoupling from the underlying mechanisms: given the preceding properties, the role of an ORM (or, more generally, of Data Mappers) is reduced to a minimum. Ideally, the model does not have to change in case of, for instance, a migration from an ORM to raw-file persistence. Only the implementation of the persistence component does.

- Testability: one important consequence of the decoupling from the underlying mechanism is that domains are easier to test. That is because there’s no need to build big trees of entities to have complete fixtures, and also because of the enhanced persistence ignorance.
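Several of the points above (explicit transactional scope, collaborative domains, decoupling and testability) can be sketched together. The following is a minimal illustration, not a definitive implementation: `Account`, `InMemoryAccountRepository`, `ConcurrencyError` and `withdraw_from_account` are hypothetical names, and the dictionary stands in for a document store that saves one aggregate state atomically. The version check is a plain optimistic-locking scheme that I am assuming for the example.

```python
from dataclasses import asdict, dataclass


class ConcurrencyError(Exception):
    """Raised when a stale copy of an aggregate is saved."""


@dataclass
class Account:
    """Hypothetical aggregate root: one method maps to one transaction."""
    account_id: str
    balance: int = 0
    version: int = 0

    def withdraw(self, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        if amount > self.balance:
            raise ValueError("insufficient funds")
        self.balance -= amount


class InMemoryAccountRepository:
    """Stand-in for a document store: one aggregate state is one document."""

    def __init__(self) -> None:
        self._documents = {}

    def get(self, account_id: str) -> Account:
        # Eager load: the whole aggregate comes back in a single read.
        return Account(**self._documents[account_id])

    def save(self, account: Account) -> None:
        stored = self._documents.get(account.account_id)
        stored_version = stored["version"] if stored else 0
        if stored_version != account.version:
            raise ConcurrencyError(account.account_id)
        document = asdict(account)
        document["version"] = account.version + 1
        self._documents[account.account_id] = document


def withdraw_from_account(repo, account_id: str, amount: int) -> None:
    """Application transaction: load, run one root method, save one document."""
    account = repo.get(account_id)
    account.withdraw(amount)
    repo.save(account)


repo = InMemoryAccountRepository()
repo.save(Account("a-1", balance=100))
withdraw_from_account(repo, "a-1", 30)
```

Note that the fixture is a single small object and the repository is swapped for an in-memory one without touching `Account`. That is the persistence ignorance the list refers to.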

SCALABILITY

The idea behind the scalability benefits is that, if we want to distribute a big, navigable tree of objects, we probably need transaction boundaries that embrace the whole model interaction. Of course, technologies that allow distributed transactions exist. But the problem is that distributed transactions are, logically, a big impediment to scalability.

In fact, in a distributed system, each entity could live on a different machine. That implies transactional locks in different places, with the associated reliability and performance problems. This effect is even more apparent in distributed elastic systems, where the effects of massive distributed transactions are even less predictable. It’s true that it’s possible to imagine scale-aware Data Mappers that would preserve “transparent” model navigability.

My whole point, though, is that tool-based navigability between aggregate roots is a mistake per se. It gives no benefit beyond the simplicity of thinking about the model from a relational perspective, and it comes at the price of giving up all the benefits mentioned above.

What I really like about domain segregation, from the point of view of scalability and transaction management, is that it models transactions in a way that enables system distribution by (logical) design. In other words, everything is in the domain.
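As a rough sketch of what “distribution by logical design” can look like, the example below replaces one big transaction spanning two aggregates with two small ones connected by a message. Everything here is an assumption made for illustration: the `Shop` class, the `OrderPlaced` event and the in-process `deque` (which stands in for a real message broker) are hypothetical.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderPlaced:
    """Hypothetical domain event carrying identities and values only."""
    order_id: str
    sku: str
    quantity: int


class Shop:
    """Two transactional boundaries (orders, stock) linked by a message queue."""

    def __init__(self, stock: dict) -> None:
        self.orders = {}
        self.stock = dict(stock)
        self.outbox = deque()  # stand-in for a message broker

    def place_order(self, order_id: str, sku: str, quantity: int) -> None:
        # Transaction 1: touches the order aggregate only and records an event.
        self.orders[order_id] = {"sku": sku, "quantity": quantity}
        self.outbox.append(OrderPlaced(order_id, sku, quantity))

    def process_pending(self) -> None:
        # Later transactions: each event updates the stock aggregate only.
        while self.outbox:
            event = self.outbox.popleft()
            self.stock[event.sku] -= event.quantity


shop = Shop({"sku-1": 10})
shop.place_order("o-1", "sku-1", 3)
stock_before_processing = shop.stock["sku-1"]  # still 10: not yet consistent
shop.process_pending()
stock_after_processing = shop.stock["sku-1"]   # 7: consistency has caught up
```

Between `place_order` and `process_pending` the stock is temporarily out of date. Accepting that window is what removes the need for a lock spanning both aggregates, which may live on different machines.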

LESSONS LEARNED FROM NON-RELATIONAL TECHNOLOGIES

In our experience, the use of mechanisms like automatic relationship population in data mappers or, similarly, the use of persistence “hooks” in non-relational technologies is often a source of big problems. These are attempts to apply relational modelling to non-relational technologies, which is a modelling error in the first place.

DDD is all about putting domains and contexts at the center of the application, so the in-memory relational approach misses the whole point. Furthermore, it generates the same problems as lazy-loading (database overshooting), made more severe by the fact that most NRDBMSs are not as good as RDBMSs at responding to large bursts of queries at a time.

In this sense, non-relational technologies are a very good opportunity to put domain normalization into practice, as they usually reduce the gap between a carefully designed in-memory domain model and its persistent representation.
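The “database overshooting” mentioned above can be made visible with a toy experiment. This is only a sketch under stated assumptions: `CountingStore` is a hypothetical stand-in for a database client that counts round trips, and the two loaders compare relationship population by reference against one self-contained aggregate document.

```python
class CountingStore:
    """Hypothetical key-value store that counts how many queries it serves."""

    def __init__(self, documents: dict) -> None:
        self._documents = documents
        self.queries = 0

    def find(self, key: str) -> dict:
        self.queries += 1
        return self._documents[key]


def load_lines_by_reference(store: CountingStore, order_id: str) -> list:
    # Relational style on a document store: one query per referenced line.
    order = store.find(order_id)
    return [store.find(line_id) for line_id in order["line_ids"]]


def load_lines_embedded(store: CountingStore, order_id: str) -> list:
    # Aggregate as a single document: everything arrives in one query.
    return store.find(order_id)["lines"]


lines = [{"sku": "a", "qty": 1}, {"sku": "b", "qty": 2}, {"sku": "c", "qty": 3}]

referenced = CountingStore({
    "order-1": {"line_ids": ["l1", "l2", "l3"]},
    "l1": lines[0], "l2": lines[1], "l3": lines[2],
})
embedded = CountingStore({"order-1": {"lines": lines}})

by_reference = load_lines_by_reference(referenced, "order-1")
as_document = load_lines_embedded(embedded, "order-1")
```

Both loaders return the same three lines, but `referenced.queries` ends at 4 while `embedded.queries` ends at 1. The gap grows linearly with the number of related items.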

CONTACT POINTS WITH FUNCTIONAL PROGRAMMING

As we have been witnessing more and more at Apiumhub, functional programming principles are often not at odds at all with the classical principles of Object Oriented design. It’s important for us to stress that small, focused domains carry an important feature: composability over navigability, as opposed to the typical approach of “nesting aggregates” that comes from more classical approaches in DDD.

Composability might be the most emphasized property that comes from functional programming. In this sense, application transactions can be seen as a (monadic) composition of domain services.
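A minimal sketch of what such a composition could look like follows. It uses a hand-rolled result type with a `bind` operation; `Ok`, `Err`, `reserve_stock`, `charge_customer` and `place_order` are all hypothetical names invented for this example, and the dictionaries stand in for real aggregate states.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Ok:
    """Successful step: bind feeds the value into the next domain service."""
    value: Any

    def bind(self, service: Callable[[Any], Any]) -> Any:
        return service(self.value)


@dataclass(frozen=True)
class Err:
    """Failed step: bind skips every following service."""
    reason: str

    def bind(self, service: Callable[[Any], Any]) -> "Err":
        return self


def reserve_stock(order: dict):
    # Hypothetical domain service working on its own small piece of state.
    if order["quantity"] > order["stock"]:
        return Err("out of stock")
    return Ok({**order, "stock": order["stock"] - order["quantity"]})


def charge_customer(order: dict):
    # Hypothetical domain service, independent from reserve_stock.
    cost = order["quantity"] * order["price"]
    if cost > order["credit"]:
        return Err("insufficient credit")
    return Ok({**order, "credit": order["credit"] - cost})


def place_order(order: dict):
    """Application transaction expressed as a composition of domain services."""
    return Ok(order).bind(reserve_stock).bind(charge_customer)


accepted = place_order({"quantity": 2, "price": 10, "stock": 5, "credit": 100})
rejected = place_order({"quantity": 6, "price": 10, "stock": 5, "credit": 100})
```

Neither service navigates to the other's data. `place_order` only chains them, and a failure in `reserve_stock` short-circuits the chain so that `charge_customer` is never called.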

DRAWBACKS OF “EXTREME” SEGREGATION

Disclaimer: I’m writing about the logical segregation described above. I won’t map it to physical units of deployment here, nor will I go through the “micro services” debate in this article.

In our experience, the drawbacks of segregating “too much” are mainly:

- The “proliferation of domains”

- The proliferation of “boilerplate” code in connecting domains

While the first point can truly be a problem, even for experienced teams, it is often perceived as a proliferation of modules only up front. Even if I acknowledge that it’s not easy to catch the right abstractions in the first place, this obviously calls for an iterative process, and having smaller modules normally helps with it.

Another fact is that, if there are “too many domains”, it probably is because of some duplication in business rules modeling, or because it calls for a new bounded context.

Nevertheless, it’s true that, very often, developers perceive a problem when logical modularity forces a proliferation of physical modules.

Regarding the second point, we are convinced that there are better ways to express domain services, removing lots of boilerplate code and physical files by using higher levels of abstraction in the connecting code. This is especially relevant in languages like Scala.

Author


Christian Ciceri is a software architect and cofounder at Apiumhub, a software development company known for software architecture excellence. He began his professional career with a specific interest in object-oriented design issues, with deep studies in code-level and architectural-level design patterns and techniques. He is a former practitioner of Agile methodologies, particularly eXtreme programming, with experience in practices like TDD, continuous integration, build pipelines, and evolutionary design. He has always aimed for widespread technological knowledge; that’s why he has been exploring a huge range of technologies and architectural styles, including Java, .NET, dynamic languages, pure scripting languages, native C++ application development, classical layering, domain-centric, classical SOA, and enterprise service buses. In his own words: “A software architect should create a working ecosystem that allows teams to have scalable, predictable, and cheaper production.” Christian is a public speaker and author of the book “Software Architecture Metrics”, which he co-authored with Neal Ford, Eoin Woods, Andrew Harmel-Law, Dave Farley, Carola Lilienthal, Michael Keeling, Alexander von Zitzewitz, Joao Rosa, and Rene Weiß.
