
Introduction

Many companies want to apply Agile methodologies in their projects, but at the beginning of most agile coaching projects, we find that our clients' applications are not properly covered by any kind of automated tests.

In most cases, the organization has no plans to throw away those codebases and start from scratch, which means we need to design a plan to address the evergreen issue of quality in the legacy code.

How do successful teams deal with legacy code to stabilize quality and improve overall product delivery? This will be the main subject of this article.

When we work with legacy code

We call any code that is not covered by automated tests legacy code. As harsh as it sounds, you might realize that the code you are writing today is the legacy code of the coming months.

Even if you wrote it yourself, a month from now nobody will remember exactly what a piece of code was created for. This is a problem we can minimize with good unit, integration, and acceptance tests.

In an agile environment, tests are the most important documentation. I have seen organizations where more than three critical bugs were reported per development team per week, which destroys any possibility of following the sprint plan.

Developers tend to see fixing bugs as boring, exhausting, and depressing. Describing scenarios and writing automated tests before developing a user story ensures that everyone shares the same vision of what needs to be built. These activities not only reduce new bugs caused by rework or unconsidered scenarios but also, and this is the most important goal, become accepted as a necessary part of developing robust applications.

When we work with legacy code, the most valuable kind of test is the acceptance test (a black-box test). The code doesn't need to be well written: nothing prevents us from writing automated tests against the application's interface right away. When we try to write unit or integration tests, on the other hand, the code will probably be tightly coupled and not follow SOLID principles, so writing our first unit test can be a real headache.
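As a minimal illustration of black-box testing, the Python sketch below (standard library only) starts a tiny HTTP server and tests it purely through its interface. `LegacyHandler` and its `/health` endpoint are hypothetical stand-ins for a legacy application; because the tests only send requests and check responses, they would not change however tangled the code behind the endpoint is.

```python
import json
import threading
import unittest
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class LegacyHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for a legacy app; the tests never look inside it."""

    def do_GET(self):
        if self.path == "/health":
            status, payload = 200, {"status": "ok"}
        else:
            status, payload = 404, {"error": "not found"}
        body = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging during test runs


class HealthAcceptanceTest(unittest.TestCase):
    """Black-box tests: they only use the application's HTTP interface."""

    @classmethod
    def setUpClass(cls):
        # Port 0 asks the OS for a free port, so parallel CI jobs don't collide.
        cls.server = HTTPServer(("127.0.0.1", 0), LegacyHandler)
        cls.base_url = f"http://127.0.0.1:{cls.server.server_port}"
        threading.Thread(target=cls.server.serve_forever, daemon=True).start()

    @classmethod
    def tearDownClass(cls):
        cls.server.shutdown()
        cls.server.server_close()

    def test_health_endpoint_reports_ok(self):
        with urllib.request.urlopen(self.base_url + "/health") as resp:
            self.assertEqual(resp.status, 200)
            self.assertEqual(json.loads(resp.read()), {"status": "ok"})

    def test_unknown_path_returns_404(self):
        # urlopen raises HTTPError for 4xx responses.
        with self.assertRaises(urllib.error.HTTPError) as ctx:
            urllib.request.urlopen(self.base_url + "/does-not-exist")
        self.assertEqual(ctx.exception.code, 404)
        ctx.exception.close()
```

Saved as, for example, `test_health.py`, it runs with `python -m unittest test_health`. In a real project the server would be your deployed application, and the test would only need its URL.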

Beyond writing acceptance tests, we take advantage of the safety net they create to start refactoring our codebase.

This refactoring should focus on making the code testable with unit and integration tests, or on rewriting highly buggy components.
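A typical testability refactoring is to stop creating a dependency inside a function and pass it in instead (dependency injection). The sketch below assumes a hypothetical invoice repository: `PostgresInvoiceRepository`, `find_by_customer`, and `overdue_invoice_total` are illustrative names, not taken from any real codebase. Once the dependency is a parameter, the standard library's `unittest.mock` can fake it.

```python
import unittest
from unittest.mock import Mock


# Before (hypothetical legacy code): the dependency is created inside the
# function, so a unit test cannot replace it without a real database.
#
# def overdue_invoice_total(customer_id):
#     repo = PostgresInvoiceRepository(connect("prod-db"))
#     ...

# After: the repository is passed in, so a test can hand over a mock while
# production code passes the real repository.
def overdue_invoice_total(customer_id, repo):
    """Sum the amounts of a customer's overdue invoices."""
    invoices = repo.find_by_customer(customer_id)
    return sum(inv["amount"] for inv in invoices if inv["overdue"])


class OverdueInvoiceTotalTest(unittest.TestCase):
    def test_only_overdue_invoices_are_summed(self):
        repo = Mock()
        repo.find_by_customer.return_value = [
            {"amount": 100, "overdue": True},
            {"amount": 50, "overdue": False},
            {"amount": 25, "overdue": True},
        ]
        self.assertEqual(overdue_invoice_total(42, repo), 125)
        # The mock also lets us verify how the dependency was used.
        repo.find_by_customer.assert_called_once_with(42)

    def test_customer_without_invoices_owes_nothing(self):
        repo = Mock()
        repo.find_by_customer.return_value = []
        self.assertEqual(overdue_invoice_total(7, repo), 0)
```

The behavior of the function does not change; only the way it obtains its collaborator does, which is why the acceptance tests can guard this kind of refactoring.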

The goal is not only to beautify the code or make it easier to read, but also to get results from this investment. We decided to write automated tests because we believe they will bring a benefit: fewer reported bugs, and therefore less money lost by our organization because of those bugs.

What strategy should we follow to select which parts of the code to test?

The literature offers a simple guide to help you decide:

| Test type | Description | Development time | Execution time | Bugs covered |
|---|---|---|---|---|
| Unit test | Covers a method in a class, using mocks to fake the behavior of other classes/methods called from the tested method. | LOW: minutes. | LOW | LOW. Only effective at detecting bugs when someone changes the method's code without considering all possible cases. |
| Integration test | Covers a method in a class without mocking: it actually calls the other objects and, if required, loads fixtures (fake data) into the data storage. | MEDIUM: longer than a unit test, perhaps over an hour. | MEDIUM | MEDIUM. Detects bugs across a broader range of code than unit tests. |
| Automated acceptance test | The same test a user would perform against our application, but executed by a machine. | HIGH: depending on the technology, maybe over an hour per scenario. | HIGH | HIGH. Detects any bug or problem visible to the user in the tested scenario. |
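To make the unit vs. integration distinction in the table concrete, here is a hedged sketch of an integration test in Python. Instead of mocking, it runs a hypothetical `SqliteInvoiceRepository` against a real (in-memory) SQLite database and loads fixture rows before each test; the table layout and names are assumptions for illustration.

```python
import sqlite3
import unittest


class SqliteInvoiceRepository:
    """Hypothetical real repository backed by SQLite."""

    def __init__(self, conn):
        self.conn = conn

    def overdue_total(self, customer_id):
        row = self.conn.execute(
            "SELECT COALESCE(SUM(amount), 0) FROM invoices "
            "WHERE customer_id = ? AND overdue = 1",
            (customer_id,),
        ).fetchone()
        return row[0]


class InvoiceRepositoryIntegrationTest(unittest.TestCase):
    def setUp(self):
        # A fresh in-memory database per test keeps tests independent.
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute(
            "CREATE TABLE invoices (customer_id INTEGER, amount INTEGER, overdue INTEGER)"
        )
        # Fixtures: fake data loaded into the real data store.
        self.conn.executemany(
            "INSERT INTO invoices VALUES (?, ?, ?)",
            [(42, 100, 1), (42, 50, 0), (42, 25, 1), (7, 999, 1)],
        )
        self.repo = SqliteInvoiceRepository(self.conn)

    def tearDown(self):
        self.conn.close()

    def test_overdue_total_runs_the_real_query(self):
        self.assertEqual(self.repo.overdue_total(42), 125)

    def test_unknown_customer_returns_zero(self):
        self.assertEqual(self.repo.overdue_total(1), 0)
```

Unlike a mocked unit test, this one also catches mistakes in the SQL itself, which is the "broader range" the table refers to; the price is a slower setup per test.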

At this point, you are probably ready to work on a plan to test your legacy code. Here are some steps you shouldn’t skip.

Step 1: Choose your technological stack

What tools, programming language, or frameworks are you going to use to write your tests? This is the basic architecture of your tests and, as such, it will be really hard to change if you make the wrong choice. At this point, I suggest that you involve your Chief Technology Officer (CTO) or technical leaders to make this decision. It’s important to have the right people on board.

Step 2: Prepare a continuous integration suite

Our tests should run in your continuous integration (CI) tool after every commit. Executing all of them should take no more than 10 to 20 minutes, roughly the minimum time developers need to review a commit for a small user story. Tests should be as independent of each other as possible, and they should not be fragile: the setup, teardown, and test logic must be designed so that different tests running simultaneously won’t raise false alerts. We should be ready to parallelize the execution.
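One way to keep tests independent enough to run in parallel is to give every test its own disposable resources in setup and release them in teardown. A minimal Python sketch, where `export_report` is a hypothetical function under test that always writes a file named `report.txt`:

```python
import os
import tempfile
import unittest


def export_report(directory, lines):
    """Hypothetical code under test: writes a report file into `directory`."""
    path = os.path.join(directory, "report.txt")
    with open(path, "w", encoding="utf-8") as fh:
        fh.write("\n".join(lines))
    return path


class ExportReportTest(unittest.TestCase):
    def setUp(self):
        # Each test gets its own scratch directory, so two tests (or two CI
        # workers) writing "report.txt" at the same time cannot clash.
        self._tmp = tempfile.TemporaryDirectory()
        self.workdir = self._tmp.name

    def tearDown(self):
        # Runs after every test, pass or fail, so no state leaks into the
        # next test.
        self._tmp.cleanup()

    def test_report_contains_all_lines(self):
        path = export_report(self.workdir, ["a", "b"])
        with open(path, encoding="utf-8") as fh:
            self.assertEqual(fh.read(), "a\nb")

    def test_empty_report_is_created(self):
        path = export_report(self.workdir, [])
        self.assertTrue(os.path.exists(path))
        self.assertEqual(os.path.getsize(path), 0)
```

With isolation like this in place, a parallel runner can split the suite across workers; for example, the pytest-xdist plugin for pytest distributes tests with `pytest -n auto`.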

Step 3: Define priority levels for your applications

The priority levels must be agreed upon across the whole organization (this point is very important). Keep them as simple as possible: ideally, you will have only three levels, High (P1), Medium (P2), and Low (P3).

For example:

High-priority incidents must match at least one of these conditions:

- System is down

- System is seriously damaged

In this case, we will put all our energy into the incident at any time and will not stop until we have solved it and, where applicable, fixed the bug in the code that caused it.

Medium impact. The application can work with limited functionality:

- System damaged

In this case, we will plan to fix the bug during the next sprint, prioritizing it alongside the rest of the user stories.

Low impact. The application works properly; however, there is an aesthetic or non-critical defect:

- System slightly affected

This bug will be fixed at some point, according to our bug policy.

Every party involved must agree on this list. You should also include real examples for the different levels of priority.
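Writing the agreed policy down precisely removes ambiguity when triaging. The Python sketch below is only an illustration: the `Incident` fields are assumptions that mirror the example conditions above, and a real organization's list (and its real examples) will differ.

```python
from dataclasses import dataclass
from enum import Enum


class Priority(Enum):
    P1 = "High: fix immediately, stop everything else"
    P2 = "Medium: fix in the next sprint"
    P3 = "Low: fix eventually, per bug policy"


@dataclass
class Incident:
    # Hypothetical fields mirroring the conditions agreed by the organization.
    system_down: bool = False
    seriously_damaged: bool = False
    limited_functionality: bool = False


def classify(incident: Incident) -> Priority:
    """Map an incident to the agreed priority level (first match wins)."""
    if incident.system_down or incident.seriously_damaged:
        return Priority.P1
    if incident.limited_functionality:
        return Priority.P2
    # The application works properly; only cosmetic or non-critical defects.
    return Priority.P3


print(classify(Incident(system_down=True)).name)            # P1
print(classify(Incident(limited_functionality=True)).name)  # P2
print(classify(Incident()).name)                            # P3
```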

What are we going to automate? Incidents categorized as high priority and components that change frequently in our application are the clear candidates for automation.

Step 4: List your product features

Define high-level features and assign priorities to them. If a feature is assigned P1, go deeper into it: split it into functionalities, or add new functionalities inside the P1 feature, written as user stories. Assign a priority to each of those user stories.

If a feature is P2, but it is a frequently changed part of your application, name the feature P2UP. For those P2UPs, write the user stories contained in that feature. Again, assign priorities to each user story.

Features with a P1 or P2UP priority are the ones for which you will write automated tests. At this point, if you plan to run (manual) regression tests before each release, you should describe all the scenarios. If you don’t, you can describe the scenarios as part of automating each user story.

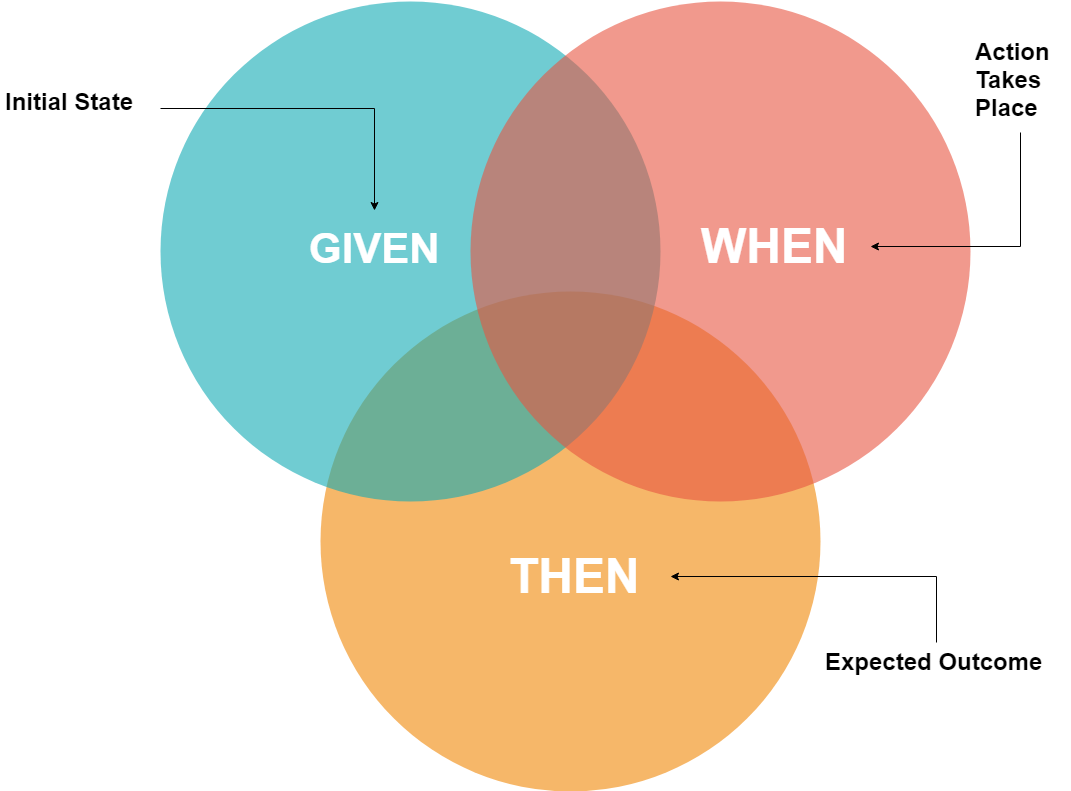
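The selection rule of this step can be sketched in a few lines of Python. The feature names, the `recent_changes` field, and the threshold of 5 changes are all hypothetical; each team would pick its own definition of "frequently changed", for example commits touching the feature in the last quarter.

```python
from dataclasses import dataclass

# Hypothetical threshold: how many changes in the review period make a
# P2 feature "frequently changed". Each team should pick its own value.
FREQUENT_CHANGE_THRESHOLD = 5


@dataclass
class Feature:
    name: str
    priority: str        # "P1", "P2" or "P3", as agreed by the organization
    recent_changes: int  # e.g. commits touching the feature last quarter


def effective_priority(feature: Feature) -> str:
    """Promote frequently changed P2 features to P2UP."""
    if feature.priority == "P2" and feature.recent_changes >= FREQUENT_CHANGE_THRESHOLD:
        return "P2UP"
    return feature.priority


def automation_backlog(features):
    """Return the features to automate: P1 first, then P2UP."""
    order = {"P1": 0, "P2UP": 1}
    selected = [f for f in features if effective_priority(f) in order]
    return sorted(selected, key=lambda f: order[effective_priority(f)])


features = [
    Feature("checkout", "P1", 2),
    Feature("search", "P2", 9),
    Feature("profile page", "P2", 1),
    Feature("newsletter", "P3", 12),
]
print([f.name for f in automation_backlog(features)])  # ['checkout', 'search']
```

Note that, following the rule above, only P2 features are promoted; a frequently changed P3 feature such as the hypothetical "newsletter" stays out of the backlog.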

Step 5: Develop acceptance tests

Initially, we are not going to target a specific percentage of unit or integration test coverage. Our initial focus is to automate 100 percent of P1 and 100 percent of P2UP user stories. The best way to write your automated acceptance tests is with an implementation of Gherkin; implementations are available for many programming languages.
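Gherkin implementations such as Cucumber or behave work by matching each plain-language Given/When/Then step against a step definition written in code. The toy runner below only illustrates that idea in plain Python; it is not the API of any real Gherkin tool, and the shopping-cart scenario and step functions are hypothetical. In practice you would use an existing implementation for your stack.

```python
import re

# A Gherkin scenario as a product owner might write it (hypothetical feature).
SCENARIO = """
Scenario: Adding an item to an empty cart
  Given an empty shopping cart
  When the user adds 2 units of "book"
  Then the cart contains 2 items
"""

# Step definitions: each regex maps a plain-language step to Python code.
STEPS = []


def step(pattern):
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


@step(r'^an empty shopping cart$')
def empty_cart(ctx):
    ctx["cart"] = {}


@step(r'^the user adds (\d+) units of "([^"]+)"$')
def add_units(ctx, qty, item):
    ctx["cart"][item] = ctx["cart"].get(item, 0) + int(qty)


@step(r'^the cart contains (\d+) items$')
def check_total(ctx, expected):
    assert sum(ctx["cart"].values()) == int(expected)


def run_scenario(text):
    """Run each Given/When/Then line against the first matching step."""
    ctx = {}
    for line in text.strip().splitlines():
        line = line.strip()
        keyword, _, rest = line.partition(" ")
        if keyword not in ("Given", "When", "Then", "And", "But"):
            continue  # skip "Scenario:" and other non-step lines
        for regex, func in STEPS:
            match = regex.match(rest)
            if match:
                func(ctx, *match.groups())
                break
        else:
            raise LookupError(f"No step definition for: {line}")
    return ctx


print(run_scenario(SCENARIO)["cart"])  # {'book': 2}
```

The value of this style is that the scenario text stays readable by non-developers, while the step definitions are ordinary code that drives the application.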

At this stage, you should have a backlog of test automation already prioritized. Remember that it’s a live backlog, because every new feature that you write must be assigned a priority.

If a new user story is P1 or P2UP, it should also be automated and, if time permits, the rest of its test scenarios should be automated as well.

Each team defines how to organize its work in a sprint. Ideally, all team members should dedicate time every sprint to automating some tests. When only one person works on automated testing, that person ends up burdened with the responsibility for product quality, which usually leads to worse results.

As soon as you decide to write automated tests, start tracking the impact you make with your work.

Below are some correlations that are especially useful for checking that impact:

- Between tests executed before every release and new P2 bugs reported

- Between automated acceptance tests and end-of-sprint heart attacks

- Between tests executed before every release and percentage of time dedicated every sprint to developing new features

- Between unit/integration tests and bugs detected in your CI suite
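Tracking these correlations only needs a few numbers per sprint. Below is a minimal Python sketch of the Pearson correlation coefficient; the sprint figures in the usage lines are made-up placeholders, not measurements, to be replaced with your own data. (On Python 3.10 and later, `statistics.correlation` computes the same coefficient.)

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / sqrt(var_x * var_y)


# Made-up placeholder figures for four sprints; replace with your own data.
tests_before_release = [10, 20, 30, 40]
new_p2_bugs = [8, 6, 4, 2]
print(pearson(tests_before_release, new_p2_bugs))  # -1.0
```

A value close to -1 would mean that sprints with more tests executed before release also had fewer new P2 bugs; values near 0 mean no linear relationship.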

Step 6: Write unit or integration tests

Once you have created a safety net with your automated acceptance tests, you can refactor the buggiest parts of your application. At this point, you can start growing your automation code with unit and integration tests.

Achieving 100 percent code coverage with integration/unit tests is highly complex in legacy applications and not always worth the effort.

The main goal is to focus on covering the functionalities with automated acceptance tests and to write unit/integration tests only for the parts of the application that you change frequently.

In general, developers aim for 100 percent unit/integration coverage. However, depending on the size of your application and the circumstances, it’s not worth dedicating time to writing those tests for noncritical parts or parts that never change. It’s smarter to write unit/integration tests for the parts of the application that are critical and continuously changing, which brings us back to user stories defined as P1 or P2UP.
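Version-control history is a practical signal for finding the parts of the application that change frequently. The hedged Python sketch below counts how many commits touched each file, assuming you feed it the output of `git log --name-only --pretty=format:` (optionally limited with `--since`); the sample paths are hypothetical.

```python
from collections import Counter


def change_frequency(git_log_output):
    """Count how many commits touched each file.

    Expects the output of: git log --name-only --pretty=format:
    (one file path per line, blank lines between commits).
    """
    counts = Counter()
    for line in git_log_output.splitlines():
        path = line.strip()
        if path:
            counts[path] += 1
    return counts


# Hypothetical output for three commits.
sample = """src/billing/invoice.py
src/billing/tax.py

src/billing/invoice.py

src/ui/banner.py
src/billing/invoice.py
"""
for path, n in change_frequency(sample).most_common(2):
    print(path, n)
```

Files at the top of this list that also belong to P1 or P2UP features are the natural first targets for unit and integration tests.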

Conclusion

When we come across legacy code, we must design a plan that guarantees the quality of that code, and we can only guarantee it by covering it with automated tests. To decide which parts of the code to automate, we must first divide our application into its functionalities and establish a priority for each. For now, it is sufficient to guarantee coverage of the functionalities categorized as highest priority and of those that include frequently changing components, since in most cases we will not have time to reach 100% coverage of all our code.

Author


I am a Computer Engineer by training, with more than 20 years of experience in the IT sector, specifically across the entire software life cycle, acquired in national and multinational companies from different sectors.

